AI is rapidly changing how filmmakers work, and a new technique brings motion to AI-generated images.

Stability.AI, the company behind the Stable Diffusion model that has come to dominate AI-generated art, is developing a new technique to create full-motion video from a single image.

“This state-of-the-art generative AI video model represents a significant step in our journey toward creating models for everyone of every type,” the company writes on its website.

The new generative video model from Stability.AI is called Stable Video Diffusion. It lets creators upload a single image, which the model then animates into a short video clip.

The model can generate videos up to about four seconds long, at frame rates between 3 and 30 frames per second.

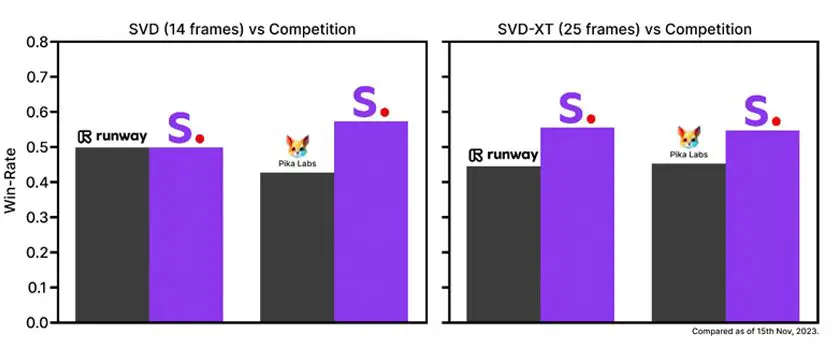

Each clip contains either 14 or 25 frames at a resolution of roughly 576×1024 pixels, which amounts to little more than an animated GIF. However, Stable Video Diffusion is just getting started, and given the exponential pace at which AI-generated media is developing, it is only a matter of time before it can generate longer, more complex videos.
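The relationship between frame count, frame rate, and clip length is simple division: duration in seconds equals frames divided by frames per second. The short Python sketch below applies that formula to the 14- and 25-frame outputs and the 3 to 30 fps range quoted above; the function name `clip_duration_seconds` is hypothetical and not part of any Stability.AI code.

```python
def clip_duration_seconds(num_frames: int, fps: float) -> float:
    """Playback length of a clip: frame count divided by frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


# Frame counts and frame-rate bounds as quoted in the article.
FRAME_COUNTS = (14, 25)
MIN_FPS, MAX_FPS = 3, 30

for frames in FRAME_COUNTS:
    # The slowest frame rate gives the longest clip, the fastest the shortest.
    shortest = clip_duration_seconds(frames, MAX_FPS)
    longest = clip_duration_seconds(frames, MIN_FPS)
    print(f"{frames} frames: {shortest:.2f} s at {MAX_FPS} fps, "
          f"{longest:.2f} s at {MIN_FPS} fps")
```

By this arithmetic, a roughly four-second clip corresponds to, for example, 25 frames played at 6 fps (about 4.2 s) or 14 frames at 3.5 fps (4.0 s); at 30 fps, the same frames last under a second.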

Also, the process currently works only with animated or drawn images and isn't ready to create photorealistic video, but Stability.AI is confident that, as the model learns, it will be able to generate more realistic videos with smoother camera motion.

Image Credit – Stability.AI

Moreover, Stability.AI is working from a limited set of images that the company states are publicly available for research purposes. With so much concern about plagiarism in generative AI, it's wise to move slowly and make sure the algorithms aren't copying details or styles without permission.

Stability’s VP of Audio has already resigned over the use of copyrighted content to train AI models, and adding video to the mix could quickly spin things out of control.

Users can download the research preview source code for Stable Video Diffusion from Stability's GitHub and Hugging Face portals.

The preview includes several models to experiment with, along with a step-by-step Google Colab notebook for testing and optimizing settings.

The white paper outlining technical details can also be downloaded.

[source: Stability.AI]
