![]()

The machine-learning world just got stupider, and that’s a good thing.

With the breakneck speed at which artificial intelligence has been progressing, there have undoubtedly been some stumbles. One of the biggest issues has been the use of copyrighted materials to train AI models as well as images that may be inappropriate or raise privacy issues. But a technique referred to as “machine unlearning” developed by researchers at the University of Texas Austin may offer a solution to those concerns.

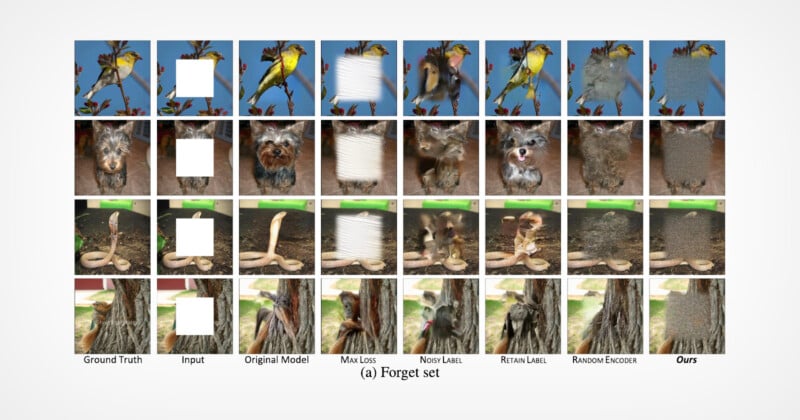

Machine unlearning allows a model to essentially “forget” specific content without the need to remove all the information as would typically be the case. According to the study, “we define a generative model as having ‘truly unlearned’ an image when it is unable to faithfully reconstruct the original image when provided with only partial information.” This illustrates a difference between simply removing the offending data, which could leave its information without the learning model.

“When you train these models on such massive data sets, you’re bound to include some data that is undesirable. Previously, the only way to remove problematic content was to scrap everything, start anew, manually take out all that data and retrain the model. Our approach offers the opportunity to do this without having to retrain the model from scratch,” Radu Marculescu, a leader on the project and a professor in the Cockrell School of Engineering’s Chandra Family Department of Electrical and Computer Engineering, tells UT News.

While the idea of machine learning is still relatively new, issues with the type of content used to train AI models have repeatedly cropped up. Copyright concerns have dogged AI companies with everyone from artists posting their content online to the New York Times calling out data misuse.

And with laws slowly catching up to technology, what is and isn’t permissible continues to evolve. For example, just this week, the White House announced new regulations for federal government agencies.

“If we want to make generative AI models useful for commercial purposes, this is a step we need to build in, the ability to ensure that we’re not breaking copyright laws or abusing personal information or using harmful content” says Guihong Li, a graduate research assistant who worked on the project.

Image credits: Machine Unlearning for Image-to-Image Generative Models