Time flies, and artificial intelligence models keep getting better. We witnessed it in spring when Midjourney rolled out its updated Version 5, which blew our minds with its unbelievably photorealistic images. As predicted, Adobe didn’t lag behind. Apart from announcing several upcoming AI tools for filmmakers, the company also launched a new model for its text-to-image generator. In Adobe Firefly Image 2, the developers promise better people generation, improved dynamic range, and some new features like negative prompting. After testing it for a while, we’re eager to share our results and thoughts with you.

The new deep-learning model Adobe Firefly Image 2 is now in beta and available for testing. In fact, if you tried out its predecessor, you can use the same link, which will open the updated image generator by default. If not, you can sign up here.

To get the hang of how Firefly works, read our detailed review of the previous AI model. In this article, we will skip the basics and concentrate on what’s new and what has changed specifically in Adobe Firefly Image 2.

Photographic quality and generation capabilities

So, the main question is: can the updated Firefly finally create realistic-looking people? As you probably remember, the previous model struggled with photorealism even when you specifically chose “photo” as your preferred content type. For example, this was the closest I got to natural-looking faces last time:

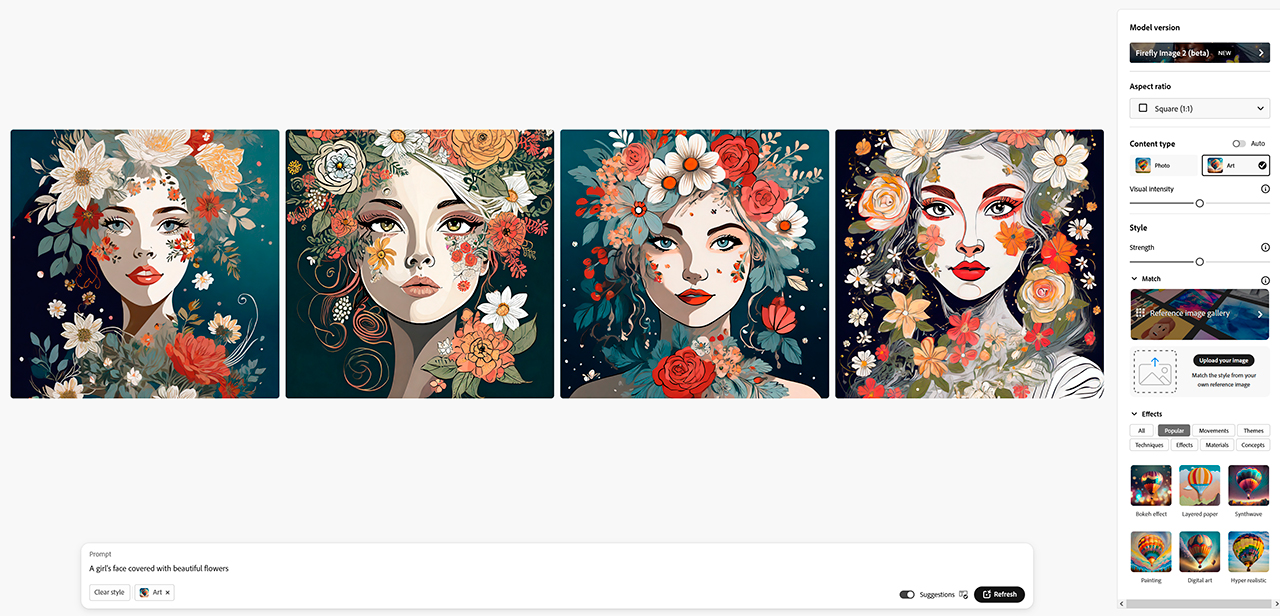

Why don’t we take the same text prompt and try it out in the latest Firefly version? I must mention here that if you don’t specify in the parameters whether you want the AI to generate “photo” or “art”, the artificial brain will automatically choose what seems most logical. That’s why the first results with my old prompt were illustrations:

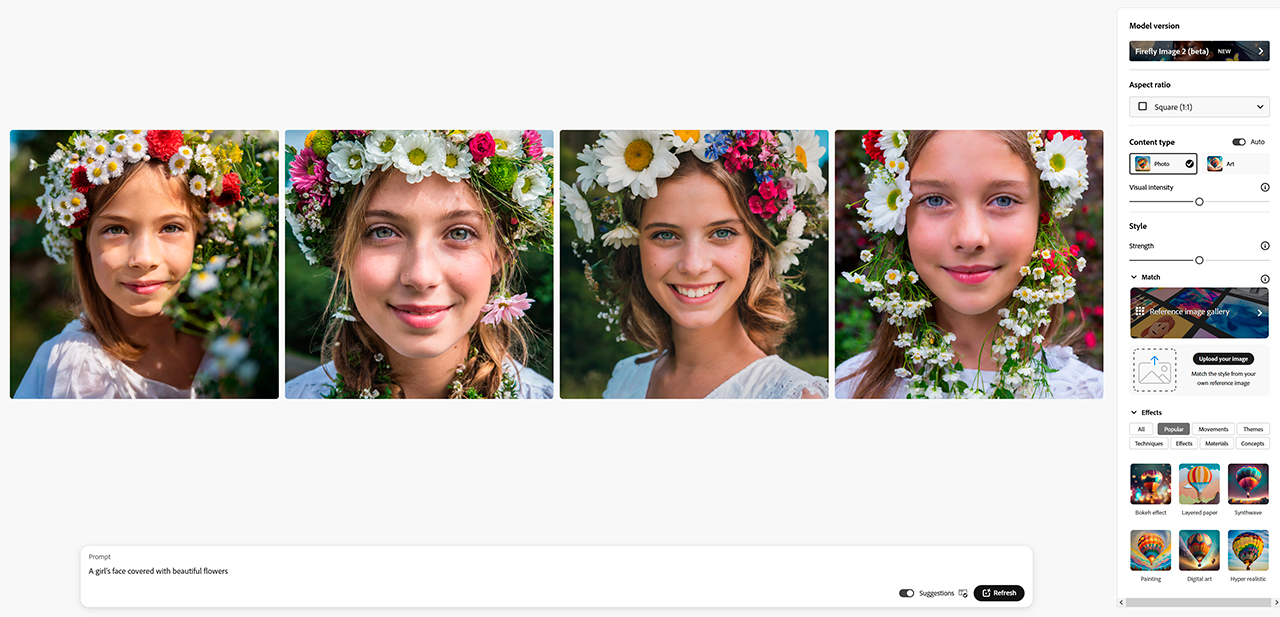

Looks nice and creative, right? However, it’s not what we were aiming for. So, let’s try again. Here is a grid of four pictures Adobe Firefly Image 2 came up with after I changed the content type to “photo”:

Wow, that’s definitely an improvement! The announcement from developers stated that the new Firefly model “supports better photographic quality with high-frequency details like skin pores and foliage.” These portrait results definitely prove them right.

The flip side of the coin

However, perfection is a myth. While the previous model couldn’t make a picture look like a photo, this one doesn’t seem to have “an imagination”. If you compare the results above, you will see that Adobe Firefly Image 2 put few to no flowers directly on the faces. Apparently, that would feel too unreal. Yet, it was the main idea behind the image I had visualized, so the old neural network was more to the point.

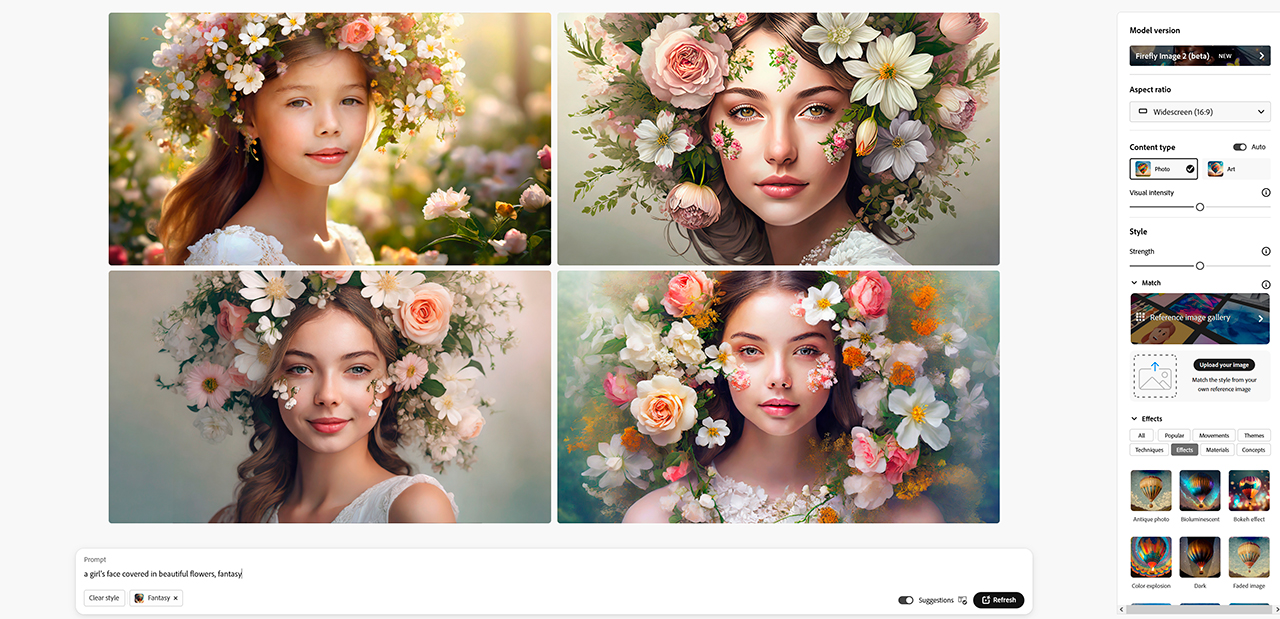

If you also want to create something rather dreamy, try playing with your text prompt and settings. For example, I added the word “fantasy” and changed the style to “otherworldly”. Those iterations brought me a slightly better match for my original concept:

What bothers me more, though, is the sudden appearance of a bias problem. Do you notice that almost all these women (even the illustrated ones) have European facial features, green or blue eyes, and long blonde or light-brown hair? Where did the diversity go? The first image generator by Adobe consistently generated all kinds of appearances, races, skin colors, etc. This one, on the contrary, sticks to one category.

Not to mention that different experiments with new settings and features delivered different results, and not all of them worked smoothly. For instance, here is Adobe Firefly Image 2’s attempt to picture children playing with a kite on the beach at sunset:

Photorealistic as hell, I know.

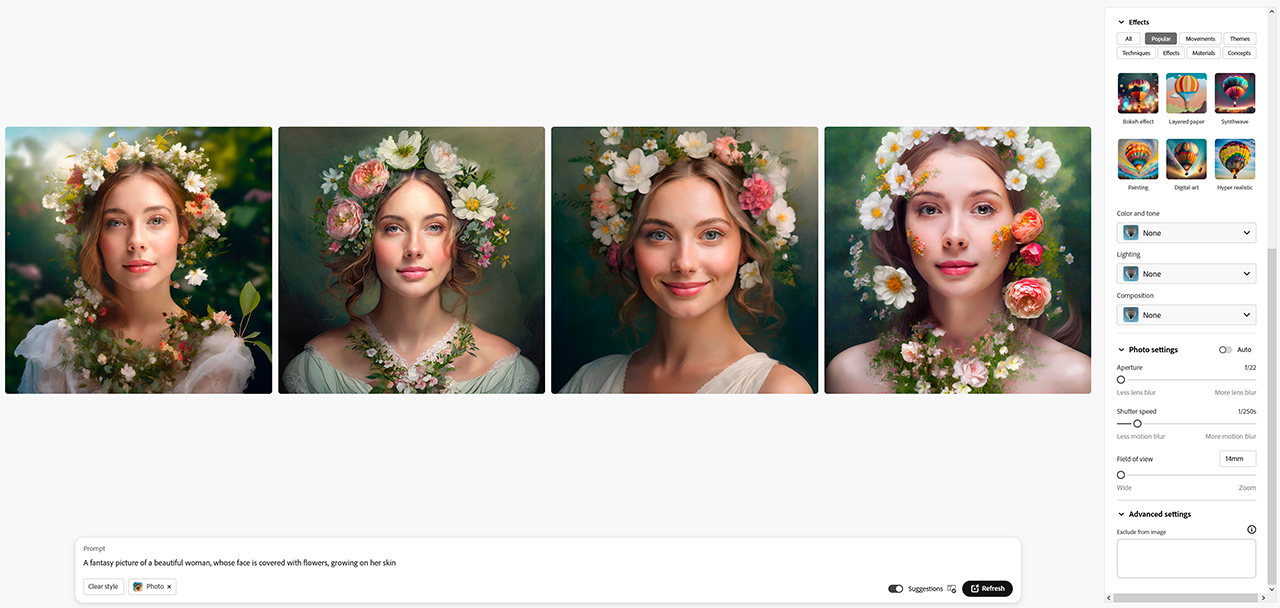

Photo settings in Adobe Firefly Image 2

The new photo settings feature sounded very appealing in the press release, especially for content creators and filmmakers who use image generators for, say, making mood boards. It includes changing the key photo parameters we are all familiar with – aperture, shutter speed, and field of view. The last one refers to the lens, which you can now specify by moving a little slider. It is also the only setting that somewhat worked in my experiments. Here you see a comparison of results with a 300mm vs 50mm camera lens:

At least there’s a subtle change, right? However, I can’t confirm the same for the aperture setting. Even when the description promised “less lens blur”, the results still had a shallow depth of field.
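For a sense of how big that change would be on a real camera, here is a minimal Python sketch of the standard angle-of-view formula for a rectilinear lens. It assumes a full-frame sensor (36mm wide), which is my choice for illustration; nothing here reflects how Firefly interprets the setting internally.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0  # assumption: full-frame (36 x 24 mm) sensor


def horizontal_angle_of_view(focal_length_mm, sensor_width_mm=FULL_FRAME_WIDTH_MM):
    """Horizontal angle of view in degrees for a rectilinear lens focused at infinity."""
    if focal_length_mm <= 0:
        raise ValueError("focal length must be positive")
    # Standard formula: AOV = 2 * atan(sensor_width / (2 * focal_length))
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


# Compare the two focal lengths used in the experiment above
for focal_length in (50, 300):
    angle = horizontal_angle_of_view(focal_length)
    print(f"{focal_length}mm: {angle:.1f} degrees")
```

Under these assumptions, a 50mm lens covers roughly 40 degrees horizontally and a 300mm lens only about 7, so a real camera would show a far more dramatic difference than the subtle one in my results.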

So, the idea of manually controlling the camera settings of the image output sounds fantastic, but we’re not there yet. When this feature starts running like clockwork, this will no doubt be reason enough to switch to Adobe Firefly, even from my favorite, Midjourney.

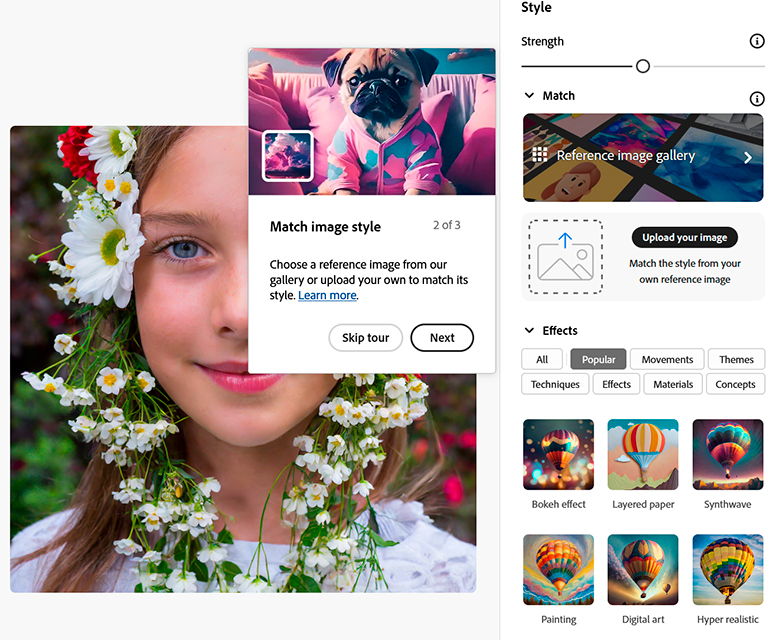

Using image references to match a style

Another new feature Adobe introduced is called “Generative Match”. It allows users to upload a specific reference (or choose from a preselected list) and transfer its style onto the generated pictures. You will find it in the sidebar along with other settings:

My idea was to create a fantasy knight in a sci-fi style using the gorgeous lighting and color palette from “Blade Runner 2049”. The first part of this task went quite well:

However, when I tried to upload the Denis Villeneuve film still, Firefly warned me that:

To use this service, you must have the rights to use any third-party images, and your upload history will be stored as thumbnails.

Sounds great, especially because a lot of people forget to credit the original artists whose pictures they use for reference. So, I changed my plan and used a film still from my own sci-fi short instead. Below you see my reference and how Firefly processed it, matching the style of the knight image results to its look and feel:

Not bad! Adobe Firefly Image 2 replicated the colors, and you can even see the grain from my original film still. Also, the AI unexpectedly got rid of the helmet to show the face of my knight. So, it tries to match the content of your reference as well as the style.

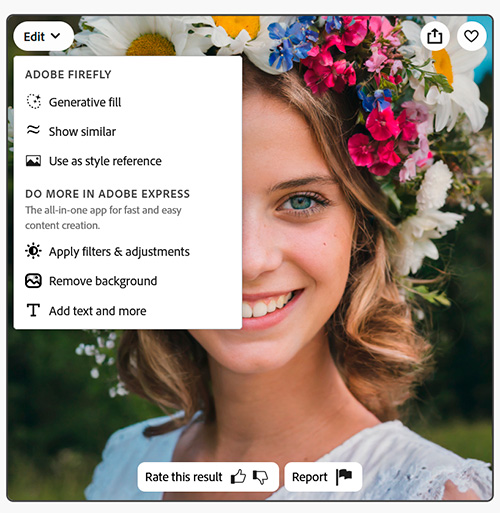

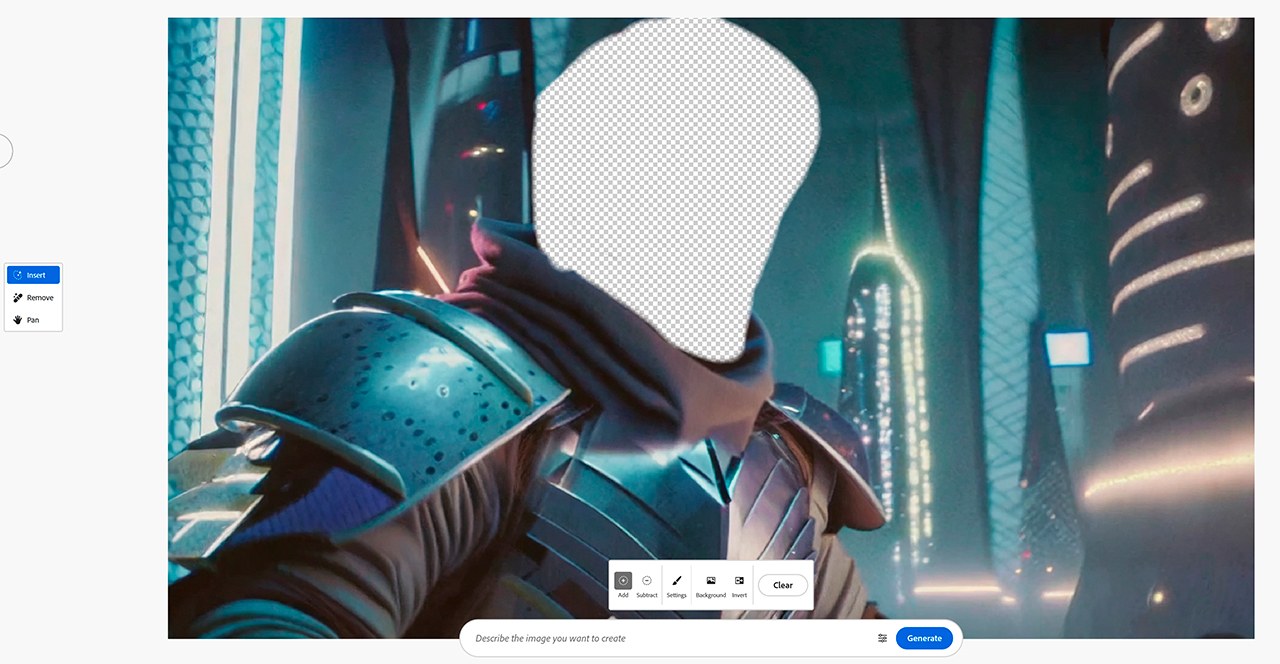

Inpainting directly in your results with Adobe Firefly Image 2

Let’s say I like the new colors but don’t want to see the face of the knight from my previous example. Would it be possible to fix it with Adobe’s Generative Fill? Sure, why not, as the AI upgrade allows us to apply the Inpaint function directly to generated images without leaving the browser:

Generative Fill is a convenient tool you can use with a simple brush (just like in Photoshop Beta) to mask out an area of the image you don’t like. Afterward, either insert new elements with a text prompt or click “remove” to let the AI come up with a content-aware fill.

To achieve a better result, I marked a slightly bigger area than I needed. (In the first attempts, the helmet was too small in proportion.) Several runs later, Firefly generated a couple of decent results, so this experiment was successful:

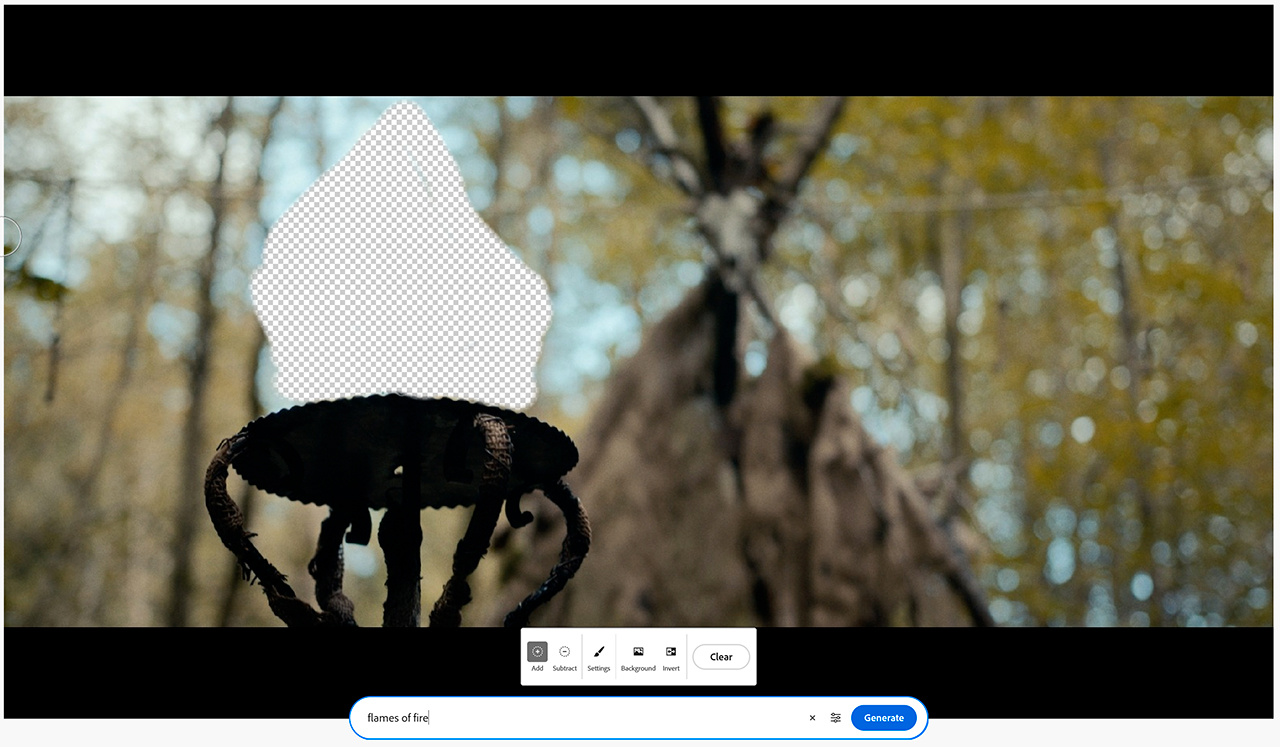

You can now also alter your own images in the browser without downloading Photoshop (Beta). You can test the Generative Fill magic yourself here. I played with the removal feature and made a very realistic-looking flame visualization for an upcoming SFX shot using a real photo from our location.

Negative prompting

At this point, adding negative prompting was the logical next step. As with other image generators, you can now add up to 100 words (English only) that you want Adobe Firefly Image 2 to avoid. To do that, click “Advanced Settings” on your right and type in specific no-go terms, pressing the return key after each one.

The developers recommend using this feature to exclude glitches and unwanted elements such as “text”, “extra limbs”, “blurry”, etc. I tried it with another concrete example. To start with, I created an illustrated picture of a cat catching fireflies in the moonlight.

The results are very lovely, but naturally, the artificial intelligence put the image of the moon in each and every picture. My idea, on the other hand, was only to recreate the soft bluish lighting. That’s why I tried to get rid of the Earth’s natural satellite by adding “moon” in the negative prompting field.

Okay, it only worked in one out of four results, and unfortunately, not in the most appealing one. Still, better than nothing. Hopefully, this feature works better with undesired artifacts like extra fingers or gore.
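If you keep a standard list of no-go terms for your projects, it may help to tidy it up before typing it into the field one entry at a time. Below is a minimal Python sketch of that idea. The helper `prepare_negative_terms` is hypothetical and has nothing to do with Adobe’s software, and counting the 100-word limit across all terms combined is my assumption about how Firefly applies it.

```python
MAX_NEGATIVE_WORDS = 100  # limit mentioned above; how Firefly counts it is an assumption


def prepare_negative_terms(raw_terms, max_words=MAX_NEGATIVE_WORDS):
    """Trim, lowercase, and de-duplicate no-go terms, then check the word limit."""
    cleaned = []
    seen = set()
    for term in raw_terms:
        # Collapse stray whitespace and normalize case
        normalized = " ".join(term.lower().split())
        if normalized and normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)
    word_count = sum(len(term.split()) for term in cleaned)
    if word_count > max_words:
        raise ValueError(f"{word_count} words exceeds the {max_words}-word limit")
    return cleaned


# One term per line, ready to be entered with the return key after each
terms = prepare_negative_terms(["moon", "text", "Extra limbs", " blurry ", "text", ""])
print("\n".join(terms))
```

The script only prepares the list; you still have to enter the terms in “Advanced Settings” by hand.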

Attribution notice

When you decide to save your results, Adobe Firefly Image 2 warns you that it will apply Content Credentials to let other people know your picture was generated with AI. In case you missed it, Adobe even created their own symbol for such purposes.

I was happy to hear that because firstly, this symbol doesn’t resemble a big red watermark “not for commercial use”, which the previous AI model stamped on each picture. Secondly, it is also a big step towards distinguishing real content from created content. Finally, Adobe’s tool even promises to indicate in the credentials when the generated result uses a reference image.

The only problem is: where is it? Scroll through my article again. At this point, you will find at least 5 images generated by Firefly and downloaded in their full size (the featured one, for example). Do you see any content credentials? What about a small “Cr” button? Neither do I. So, why do they announce it whenever you try to save a picture? Is it a bug or am I just special?

Price and availability

To get full access to all of Adobe’s AI products, you just need any Creative Cloud subscription. The type of subscription determines the number of image generations you can perform. Free users with an Adobe account but no paid software receive 25 credits to test out the AI features, with each credit representing a specific action, such as text-to-image generation. You can read more about the different pricing models here.

Adobe Firefly Image 2 is in web-based beta, but the developers promise to include it in Creative Cloud apps soon.

Have you tried the upgraded model yet? What do you think about it? Which added functions work well and which don’t, in your opinion? Let’s exchange best practices in the comment section below!