The AI chatbot race is in full swing. We first reviewed Google’s latest LLM, now known as Google Gemini 1.0 Ultra, when it was still called Bard. In addition to the name change, Gemini brings many improvements to the table, such as a more approachable interface, longer possible queries (1,000 times longer!), and better coding results. At $19.99 per month for an Advanced subscription, Gemini is priced in line with competitors ChatGPT Plus and Microsoft Copilot Pro (which charge $20 per month), but it’s the most capable and visually arresting chatbot we’ve tested. That’s enough to earn it our Editors’ Choice award in the fast-growing AI chatbot category.

What Is Google Gemini 1.0 Ultra?

Gemini 1.0 Ultra is Google’s generative AI tool, which the company released in February 2024, just months after OpenAI’s November 2023 announcement of ChatGPT 4.0 Turbo. Google executives issued an “internal code red,” speeding up the release, over the competitive threat of ChatGPT’s new way to search information—Google’s bread and butter. However, that race has not come without peril. Over the course of its public release, the new Gemini 1.0 Ultra model, or Gemini Advanced as Google is now calling it, has struggled with modeling and image generation issues.

Gemini is a large language model (LLM), just like ChatGPT and the AI included in Microsoft Copilot. If you ask Gemini a question (“What is the most popular Thanksgiving dish?”) or a request (“Make me a unique stuffing recipe, incorporating chestnuts”), it combs through a massive body of data to instantly return an answer with complete sentences that mimic human language.

(Credit: Google)

The AI model behind the version of Gemini I had access to was 1.0 Ultra. 1.0 Ultra represents a big jump over the former PaLM2 model in both capability and context length. By how much? Well, for reference, Google’s previous LLM Bard had the capacity for around 1,000 tokens per request, while the subscription-level ChatGPT 4.0 can handle up to 128,000. Gemini 1.0 Ultra, and eventually 1.5 Pro? One million and 10 million, respectively.

This means you can input far more text into a single request, allowing for more granularity and specificity, as well as longer input/return on coding evaluations, image generation, and more.
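
To put those context-length figures side by side, here is a minimal Python sketch. It uses only the token counts quoted in this review; the dictionary and the helper name `context_ratio` are illustrative and not part of any Google or OpenAI tool.

```python
# Context-window sizes (in tokens) as quoted in this review.
# These are the article's figures, not values queried from any API.
CONTEXT_TOKENS = {
    "Bard": 1_000,
    "ChatGPT 4.0": 128_000,
    "Gemini 1.0 Ultra": 1_000_000,
    "Gemini 1.5 Pro": 10_000_000,
}


def context_ratio(model: str, baseline: str = "Bard") -> float:
    """Return how many times larger `model`'s window is than `baseline`'s."""
    return CONTEXT_TOKENS[model] / CONTEXT_TOKENS[baseline]


if __name__ == "__main__":
    for name in CONTEXT_TOKENS:
        print(f"{name}: {context_ratio(name):,.0f}x Bard")
```

By these numbers, Gemini 1.0 Ultra accepts 1,000 times as many tokens as Bard did, which is where the "1,000 times longer" figure comes from, and roughly eight times as many as ChatGPT 4.0.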

How Much Does Google Gemini 1.0 Ultra Cost?

Google, much like OpenAI, offers its current chatbot in two flavors: free and premium. Anyone with a Google account will have immediate access to Gemini, but Gemini Advanced is locked behind a $19.99 monthly subscription (comparable to the $20 per month that ChatGPT Plus and Microsoft Copilot Pro charge). Right now, Google is running a promotion where you can try Gemini Advanced for 60 days for free before your card is charged.

(Credit: Google)

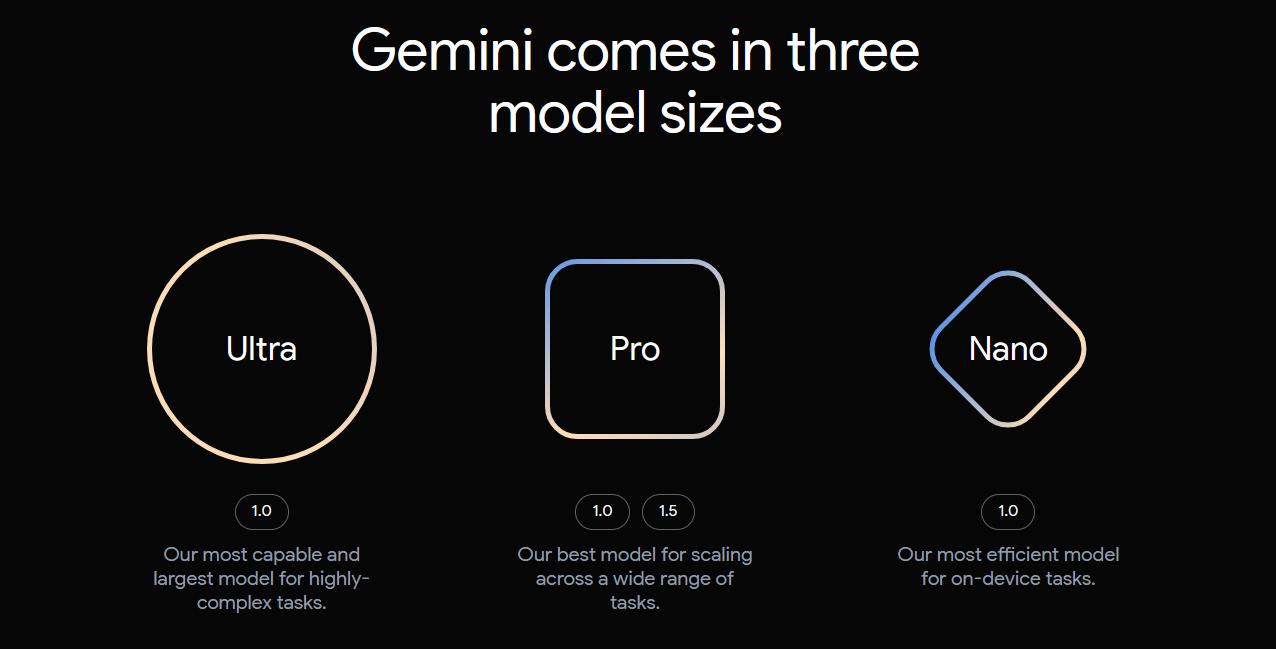

The subscription gets you access to what Google calls its 1.0 Ultra model, its “most capable,” as opposed to the free 1.0 Pro model, which the company says is better positioned for “everyday needs.” Of course, the differences between each model’s capabilities could fill textbooks of machine learning instruction.

There’s also the developer-focused Gemini 1.5 Pro model, which was released to the public on April 9th of this year, and finally, the Gemini 1.0 Nano model, which is designed to run locally on-device without any internet connection. Neither 1.5 Pro nor 1.0 Nano is in public release yet; however, I plan to evaluate them as soon as they’re available. All testing below is based on the most recent Gemini 1.0 Ultra model.

How Do You Use Google Gemini?

You can access Google Gemini at gemini.google.com. It requires a Google account (either a personal one or a Workspace account). This website is distinct from the core Google.com search page, so you have to remember to pull it up on its own. Gemini does not have a mobile app at this time, although you can access it on a mobile browser.

A chat function dominates the simple interface. Once you start entering prompts, the screen fills with Gemini’s responses, which are sometimes quite long. You can ask follow-up questions. On the top-right side, a limited menu offers Use Dark Theme (pictured below), Help, and FAQ options, as well as chat-specific features like Reset Chat and See History.

(Credit: Google)

Since Gemini is available on Chrome, it’s a good choice for a default chatbot if you already use that browser. You can also use Gemini on Edge.

Gemini (free version) works in 250 countries now, and Gemini Advanced works in over 180.

Google’s AI Is a Work in Progress

Ultra is more powerful than the previous version, and Google claims it is more powerful than GPT 4.0, which powers ChatGPT. OpenAI, which owns ChatGPT, and Google are both secretive about their models, so the public knows little about them.

Google maintains that Gemini is still an “experiment.” A tiny disclaimer below the chat function reads, “Gemini may display inaccurate or offensive information that doesn’t represent Google’s views.” However, the company is continually investing in it, most recently giving it the ability to execute code on its own to improve its logic and reasoning. At this point, the “experiment” label mostly serves as a general caveat stating that Google will not be held liable whenever Gemini returns something nonsensical or, worse, unethical. As the statement below shows, Google makes no bones about stating that Gemini is going to get things wrong.

(Credit: Google/PCMag)

Though I won’t repeat everything in the story linked to in the previous paragraph, the gist is that Gemini’s image generation was overcorrecting on just about everything imaginable. It depicted the Founding Fathers of America as black or Native American men and, when asked to “generate a photo of vanilla ice cream,” returned only images of chocolate, as you can see in the image below.

Google has overcorrected its hallucinations in the past. (Credit: Google/PCMag)

In response to the snafu, Google CEO Sundar Pichai told Google staff in a note:

“I know that some of its responses have offended our users and shown bias—to be clear, that’s completely unacceptable, and we got it wrong,” Pichai wrote. “We’ll be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evals and red-teaming, and technical recommendations.”

Image generation is functional again after a brief hiatus. But as we’ll explore below, what the word “functional” actually means is clearly subjective.

Image Generation and Story Context Benchmark: Gemini 1.0 Ultra vs. ChatGPT 4.0

For my first test, I tried to push both the image generation and creative limits of LLMs with the following prompt:

“Generate me a six-panel comic of Edo period Japan, but we’re going to spice it up. First, change all humans to cats. Second, there are aliens invading, and the cat samurai needs to fight them off! But give us a twist before the end. Communicate all of this visually without any text or words in the image.”

This benchmark always seems to return misconstrued (or often hilariously off-base) images no matter which image generator you’re using, and the results were no different here. Furthermore, the prompts we’re using are specifically designed to confuse LLMs and push their contextual understanding to the max.

While generators like Gemini are fine for simple graphic design and can handle detailed instructions for a single panel without issue, the best test of their might is their ability (or inability, in many instances) to translate natural human language into multiple images at a time.

On to the results: As shown below, GPT seems to have interpreted the idea of a twist by adding, alongside the traditional big-headed aliens, something that looks surprisingly similar to the Xenomorph in Alien. (It did manage to get the alien in question in before the end as requested, though it produced a four-panel comic, not six.)

I’ll leave it to you to decide whether you’re looking at a copyrighted character. But from where I’m sitting, all you need is a longer head, and you’ve got a Xenomorph hanging out in Edo-period Japan. With the discussion around ownership, copyright, and even the basics of what it means to be an artist heating up in the AI space over the past six months, returns like these are both concerning and par for the course.

(Credit: OpenAI/PCMag)

For example, last month, a reporter from the Wall Street Journal got exclusive access to OpenAI’s upcoming text-to-video AI generator, SoraAI. In one of those prompts, the reporter simply asked for the AI to return a mermaid sitting underwater with a crab, which, to me, was calculated to get it to return copyrighted Little Mermaid materials.

(Credit: The Wall Street Journal)

SoraAI did seem to violate copyright, but, as you can see in the image above, it threw in a SpongeBob SquarePants curveball instead, stealing from Paramount Studios (which owns Nickelodeon) rather than Disney. All the journalist had input into SoraAI was “a crab,” just as all I entered into ChatGPT was “aliens” (note the lack of capital letters). When directly asked whether or not SoraAI was trained on YouTube, OpenAI CTO Mira Murati gave the unsatisfying answer that SoraAI is “only trained on publicly available data.” It’s unsatisfying because movies and TV shows are publicly available data, and there are clips from both Alien and SpongeBob SquarePants on YouTube.

Gemini’s returns, meanwhile, were copyright-free, but images like the misshapen cat below will assuredly give me nightmares well after this review is published.

The cat samurai Gemini generated is borderline terrifying. (Credit: Google/PCMag)

While on some level, ChatGPT understood the basics of the assignment, no part of Gemini’s response was coherent or even something I’d want to look at in the first place. Take anything more than a quick glance at the image below from Gemini to see what I mean.

(Credit: Google/PCMag)

So, though ChatGPT ultimately wins for image clarity and somewhat better contextual understanding, it might be committing copyright violations in the meantime. Companies responsible for these AIs seem comfortable playing fast and loose with the concept of digital ownership, and until these issues are sorted out in the courts—almost definitely of a Supreme variety—we wouldn’t recommend using these tools to generate any images or videos that would be either public-facing or used for private profit. Think marketing materials, sales brochures, or anything that may not pass the sniff test once the courts inevitably pass judgment on the legality of AI image- and video-generation tools in the coming years.

Image Recognition Benchmark: Gemini 1.0 Ultra vs. ChatGPT 4.0

Both GPT and Gemini recently updated their LLMs with the ability to recognize and contextualize images. I haven’t found a main use case for consumers or even that many working professionals yet; however, it’s still a function of the service that bears testing regardless.

For a bit of cheeky fun, I decided my benchmark would mimic the famous mirror self-recognition (MSR) tests scientists run on animals to test their cognition and intelligence levels.

(Credit: Google/PCMag)

Though the picture I asked the LLMs to evaluate (above) looks like any generic server farm, it’s specifically picturing a server farm running an LLM. On a precision level, we’ll say both chatbots got 99% in this test, perfectly describing every element in the picture with detail.

Thankfully, neither seemed to understand the last 1% of the image, that they were actually looking at a picture of themselves generating the answer.

How Does Google Gemini 1.0 Ultra Handle Creative Writing?

One aspect of creative writing that LLMs famously struggle with in tests is the idea of twists. Often, what they think users can’t see coming are some of the most obvious tropes that have been repeated throughout media history. And while most of us who watch TV or movies have the collective sum of those twists stored in our heads and can sense the nuance of when something’s coming, AI struggles to understand concepts like “surprise” and “misdirection” without eventually hallucinating a bad result.

So, how did Gemini fare when I asked it to give a new twist on the old classic switcheroo, Little Red Riding Hood? Here’s the prompt, followed by the output:

“Write me a short (no more than 1,000 words), fresh take on Little Red Riding Hood. We all know the classic twist, so I want you to Shyamalan the heck out of this thing. Maybe even two twists, but not the same ones as in the original story.”

For starters, let’s get one thing clear: Neither GPT nor Gemini will be replacing M. Night Shyamalan anytime soon, and I’ll leave it up to you to decide how to feel about that. The stories were not only objectively bad, but they were also exactly what I prompted them not to be: predictable. In Gemini’s case, it actually told me what the twist was going to be in the second paragraph of a 1,000-word test story.

From what I can gather, it seemed to be trying some kind of Chekhov’s Gun misdirection but tripped over the specific instructions to give the story a twist.

(Credit: Google/PCMag)

I won’t copy and paste the whole story for context, but think of it like this: 20 minutes into The Sixth Sense, someone stares directly down the barrel of the camera and talks about how long it’s been since anyone has seen Bruce Willis’ character around. Sure, Gemini didn’t technically reveal the twist, but come on.

That said, it was more verbose and action-packed than GPT’s story, which was almost insufferably saccharine by the end. GPT also misunderstood the assignment of a “twist.” First, the prompt asked for a double-twist, which is a less often-used trope but still clever when pulled off properly.

(Credit: OpenAI/PCMag)

ChatGPT 4.0 sort of included one twist, that being that the Wolf in the story is actually a guardian of the forest, but stumbled on the second. Though it was hard to parse, from what I can gather, it was clumsily trying to mash the Wolf and the Woodsman together in a way that didn’t make sense with the original intent of Riding Hood’s visit.

The reason this test matters is that even though many writers and those working in creative fields are concerned about the impact that LLMs like these may have on their industry, we’re still a long way off from AI being innovative in any sort of original way. The amalgamation of tired TV tropes GPT and Gemini paste together would still raise a few questions about story structure and character motivations for an eight-year-old, let alone discerning adults or film critics.

Coding With Google Gemini 1.0 Ultra

Gemini can also help generate, evaluate, and fix code in at least 20 programming languages. At the company’s annual I/O conference, one speaker noted that coding assistance is one of the most popular uses of Gemini. Like ChatGPT, it promises the ability to debug code, explain the issues, and generate small programs for you to copy. But it goes a step further than ChatGPT with the ability to export to Google Colab and, as of a mid-July update, Replit for Python.

However, Google warns against using the code without verifying it. “Yes, Gemini can help with coding and topics about coding, but Gemini is still experimental, and you are responsible for your use of code or coding explanations,” an FAQ reads. “So you should use discretion and carefully test and review all code for errors, bugs, and vulnerabilities before relying on it.” But unlike inaccurate text, code can be easier to fact-check. Run it and see if it works.

To test Gemini’s coding ability, I asked it to find the flaw in the following code, which is custom-designed to trick the compiler into thinking something of type A is actually of type B when it really isn’t.

“Hey, Gemini, can you help me figure out what’s wrong here?: pub fn transmute<A, B>(obj: A) -> B { use std::hint::black_box; enum DummyEnum<A, B> { A(Option<Box<A>>), B(Option<Box<B>>), } #[inline(never)] fn transmute_inner<A, B>(dummy: &mut DummyEnum<A, B>, obj: A) -> B { let DummyEnum::B(ref_to_b) = dummy else { unreachable!() }; let ref_to_b = crate::lifetime_expansion::expand_mut(ref_to_b); *dummy = DummyEnum::A(Some(Box::new(obj))); black_box(dummy); *ref_to_b.take().unwrap() } transmute_inner(black_box(&mut DummyEnum::B(None)), obj) }”

(Credit: Google/PCMag)

Our returned answer from Gemini was over 1,000 words long, so I won’t repeat it verbatim here. However, in the analysis, I found that while, on the whole, the answer was accurate, some portions of the code were phrased in such a way that the function wouldn’t perform the necessary checks to avoid the UB (Undefined Behavior). This was an explicit instruction that Gemini either didn’t understand or avoided entirely.

Meanwhile, ChatGPT 4.0 got the same treatment and returned a nearly 100% accurate answer, one that was simply missing some terminology for added context.

When using the web as a jumping-off point for research, I prefer Gemini over ChatGPT. Gemini seems to have nailed the research portion of local businesses far better than GPT could, which makes sense when you consider the available datasets.

Though this is only suspicion on my part, I’d imagine this accuracy and helpfulness gap comes from far fewer user-submitted reviews for local businesses on Microsoft Bing than Google.

(Credit: Copilot/PCMag)

As you can see above, the same business searched via Copilot returns 58 user reviews from TripAdvisor—and who knows if GPT can even read those—while Google has over 750 reviews that talk about everything from pricing to reservation policies and more.

On to the test. Here, I evaluate how well Gemini can contextualize and pull information from the websites of local businesses. In this case, I asked both Gemini and GPT to give me a list of local veterinarians who specialize in treating exotic animals. I have an injured iguana that needs AI’s help!

(Credit: Google/PCMag)

Right away, Google’s data presentation is an obvious upgrade over Bard and GPT 4.0, displaying maps along with helpful links and those aforementioned star ratings right in the window. On the GPT side, I got more detailed descriptions of each vet along with phone numbers and specific information about the iguana request.

Who knew Colorado had the largest private exotics-exclusive veterinary practice in America? (Credit: OpenAI/PCMag)

Both returned detailed results, with GPT just edging out Gemini in contextualization and helpfulness thanks to its inclusion of the Colorado Exotic Animal Hospital. In its own words, it recognized that the trip might be further (all the Gemini results stayed in Boulder); however, given its status, there might be a better chance of finding an iguana specialist at that location.

I went on to ask Gemini and ChatGPT for recommendations on what to do in Boulder, Colorado, this weekend. In this testing, we’ve seen a direct swap of results between GPT and Gemini since PCMag’s first time benchmarking LLMs last year. When I last tested ChatGPT, it gave me a list of generic activities that occur annually on this same weekend, along with general recommendations that anyone with eyes in Boulder could figure out themselves, like going on a hike. This time, GPT gave highly specific events.

ChatGPT gives us the choice between Goat Yoga and Community Cuddles. (Credit: OpenAI/PCMag)

Meanwhile, last time, Bard was specific, and this time, Gemini returned options for a Film Festival (which happens on the same weekend every year), “live music at BOCO Cider,” which is a given for a Saturday night, and the farmers market. These suggestions felt not only bland and broad but also not really all that specific to Boulder.

If you want to get a sense of what Boulder’s really like, you’re not gonna do better than a Baby Goat Yoga class.

Travel Planning With Gemini 1.0 Ultra

Another helpful application of chatbots is travel planning and tourism. With so much contextualization on offer, you can specialize your requests of a chatbot in much the same way you’d have a conversation with a travel agent in person.

You can tell the chatbot your interests, your age, and even down to your level of hunger for adventures off the beaten path:

“Plan a 4-day trip to Tokyo for this summer for myself (36m) and my friend (33f). We both like cultural history, nightclubs, karaoke, technology, and anime and are willing to try any and all food. Our total budget for the four days, including all travel, is $10,000 apiece. Hook us up with some fun times!”

Here, the roles reversed once again, with Gemini giving highly specific, tailored results while GPT only gave vague answers that effectively took none of the context clues about our interests into account.

(Credit: OpenAI/PCMag)

Worse yet, when I asked GPT if it could look at hotels and flights for our preferred travel dates, the lack of current datasets shone through. It tried looking at a single hotel but couldn’t find prices. As for flights, I was told I was on my own and that “it’s advisable to check regularly for the latest deals on sites like KAYAK.” Thanks, GPT, I hadn’t thought of that one yet.

(Credit: Google/PCMag)

Meanwhile, Gemini not only gave me a full breakdown of prices, times, potential layovers, and the best airport to leave from, but it also directly embedded Google Flights data into the window.

(Credit: Google/PCMag)

Our hotel treatment was much the same, with embedded images, rates, and star ratings for some of the options in town that were best suited to my budget and stay length.

(Credit: Google/PCMag)

GPT 4.0? It advised that we “might like the Park Hyatt Tokyo since it was featured in Lost in Translation,” completely ignoring that the rooms are $700 a night. Not only was the single recommendation it managed to give far over budget, but it also seemed to disregard every context clue in the prompt. In planning your next vacation, it’s not even close: Gemini far outstripped GPT in every metric, including contextual understanding, data retrieval, data presentation, data accuracy, and overall helpfulness.

Performance Testing: Google Gemini 1.0 Ultra vs. ChatGPT 4.0

Using the chatbot benchmarking feature—yes, those exist now—on the website Chat.lmsys, I was able to directly test the performance of the most recent models of ChatGPT 4.0 and Google Gemini 1.0 Ultra head-to-head.

(Credit: Lmsys.org/PCMag)

Punching in the same code evaluation task, I asked Gemini to debug the above. I found that ChatGPT 4.0 returned its response first at 41 seconds with a total word count of 357, while Gemini 1.0 Ultra came in close behind at 46 seconds but with 459 words written. As I discussed above, ChatGPT’s coding answer was slightly more accurate, but only just. The differences in results primarily came down to some missteps in verbiage in one portion of Gemini’s result.
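
For a rough sense of output speed, the timings above can be converted into words per second. The following Python sketch uses only the two measurements quoted in this section; the helper name `words_per_second` is illustrative, and a single timed run like this is an informal data point, not a rigorous benchmark.

```python
def words_per_second(words: int, seconds: float) -> float:
    """Return average output speed for one timed response."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return words / seconds


# (word count, seconds to complete) from the single run described above.
RESULTS = {
    "ChatGPT 4.0": (357, 41),
    "Gemini 1.0 Ultra": (459, 46),
}

if __name__ == "__main__":
    for model, (words, seconds) in RESULTS.items():
        print(f"{model}: {words_per_second(words, seconds):.2f} words/sec")
```

On these figures, ChatGPT 4.0 averaged about 8.7 words per second and Gemini 1.0 Ultra about 10, so Gemini finished later only because it wrote more.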

Google Takes a Huge Step Forward With Gemini 1.0 Ultra

Gemini presents a clear vision of how Google thinks AI chatbots will look and act in the 21st century, and it’s a convincing one. A friendly interface and much larger token inputs (which let you put in far longer requests) make Gemini Ultra a pleasure to use on the front end. Just as important are the results it delivers, with accurate returns on the information you’re looking for and few misses on coding tests. Perhaps most critically, we have yet to see it violate copyright. All of this combines to make Gemini the most approachable and accurate of the services we’ve tested so far and the clear winner of our Editors’ Choice award for AI chatbots.

Emily Dreibelbis contributed to this review.

The Bottom Line

An attractive, accessible interface, coding smarts, and the ability to answer questions accurately make Gemini 1.0 Ultra the best AI chatbot we tested, especially for newbies.
