“Space,” according to Douglas Adams’ The Hitchhiker’s Guide to the Galaxy, “is big. Really big. You just won’t believe how vastly, hugely, mind-bogglingly big it is. I mean, you may think it’s a long way down the road to the chemist’s, but that’s just peanuts to space.”

It turns out the same is true of cameras made to map space. You may think your full-frame camera is big, but that’s nothing compared to the Legacy Survey of Space and Time (LSST) camera recently completed by the US Department of Energy’s SLAC National Accelerator Laboratory.

You may have seen it referred to as the size of a small car, but if anything that undersells it. SLAC has essentially taken all the numbers you might recognize from photography, made each of them much, much bigger and then committed to a stitched time-lapse that it hopes will help scientists understand dark matter and dark energy.


Unlike many astro and space projects, LSST is recognizably a camera: it has a mechanical shutter, lenses and rear-mounting slot-in filters. Image: Chris Smith / SLAC National Accelerator Laboratory

We got some more details from Andy Rasmussen, SLAC staff physicist and LSST Camera Integration and Testing Scientist.

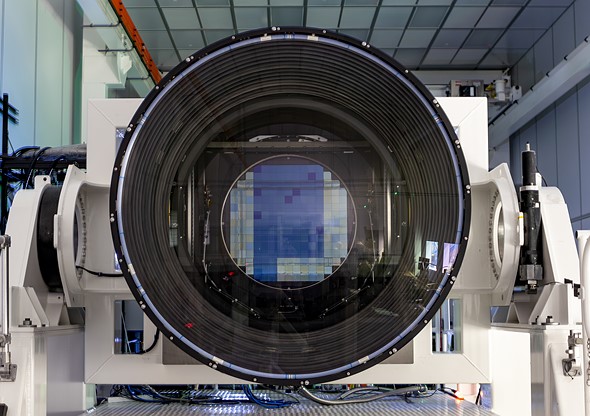

The LSST has a 3100-megapixel imaging surface. That surface is an array made up of 189 individual sensors, each of which is a 41 x 40mm 16.4MP CCD. Each of these sensors is larger than consumer-level medium format and, arranged together, they give an imaging circle of 634mm (24.9″). That’s a crop factor of 0.068x for those playing along at home.
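For anyone who does want to play along at home, the sums can be sketched in a few lines of Python. This is only a rough check using the figures quoted above; the 36 x 24mm full-frame reference size and the function names are our own assumptions, not anything supplied by SLAC.

```python
import math

# Figures quoted in the article
SENSOR_COUNT = 189
MEGAPIXELS_PER_SENSOR = 16.4
IMAGE_CIRCLE_MM = 634.0

# Assumption: "full-frame" means the usual 36 x 24mm format
FULL_FRAME_MM = (36.0, 24.0)


def total_megapixels(sensor_count, mp_per_sensor):
    """Total pixel count of the tiled array, in megapixels."""
    return sensor_count * mp_per_sensor


def crop_factor(image_circle_mm, reference_mm=FULL_FRAME_MM):
    """Ratio of the reference format's diagonal to the imaging circle."""
    reference_diagonal = math.hypot(*reference_mm)
    return reference_diagonal / image_circle_mm


print(f"{total_megapixels(SENSOR_COUNT, MEGAPIXELS_PER_SENSOR):.0f} MP")
print(f"crop factor: {crop_factor(IMAGE_CIRCLE_MM):.3f}x")
```

Both results round to the numbers in the text: roughly 3100MP and a crop factor of about 0.068x.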

The individual pixels are 10μm in size, giving each one nearly three times the area of the pixels in a 24MP full-frame sensor, or seven times the area of those in a 26MP APS-C, 61MP full-frame or 100MP 44 x 33 medium format model.
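The pixel comparison can be approximated by dividing each sensor's area by its pixel count. The sketch below does that, with the caveat that the sensor dimensions are nominal values we have assumed (36 x 24mm for full-frame, 23.5 x 15.6mm for APS-C) and that it ignores any gaps between pixels.

```python
def pixel_area_um2(width_mm, height_mm, megapixels):
    """Approximate area of one pixel in square microns."""
    sensor_area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    return sensor_area_um2 / (megapixels * 1e6)


# 10 micron square pixels, as quoted in the article
LSST_PIXEL_AREA_UM2 = 10.0 * 10.0

# Assumption: nominal dimensions (mm) and pixel counts for each format
COMPARISON_SENSORS = {
    "24MP full-frame": (36.0, 24.0, 24),
    "26MP APS-C": (23.5, 15.6, 26),
    "61MP full-frame": (36.0, 24.0, 61),
    "100MP 44 x 33": (44.0, 33.0, 100),
}


def area_ratio(width_mm, height_mm, megapixels):
    """How many times larger an LSST pixel is, by area."""
    return LSST_PIXEL_AREA_UM2 / pixel_area_um2(width_mm, height_mm, megapixels)


for name, spec in COMPARISON_SENSORS.items():
    print(f"{name}: LSST pixel is about {area_ratio(*spec):.1f}x the area")
```

With these assumed dimensions the ratios come out at around 2.8x for the 24MP sensor and close to 7x for the other three.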

To utilize this vast sensor, the LSST has a lens with three elements, one of which is recognized by Guinness World Records as “the world’s largest high-performance optical lens ever fabricated.” The front element is 1.57m in diameter (5.1 ft), with the other two a mere 1.2m (3.9 ft) and 72cm (2.4 ft) across. Behind this assembly can be slotted one of six 76cm (2.5 ft) filters that allow the camera to only capture specific wavelengths of light.

This camera is then mounted as part of a telescope with a 10m effective focal length, giving a 3.5 degree diagonal angle of view (around a 680mm equiv lens, in full-frame terms). Rasmussen puts this in context: “the outer diameter of the primary mirror is 8.4 meters. Divide the two, and this is why the system operates at f/1.2.”

That’s f/0.08 equivalent (or around eight stops more light if you can’t remember the multiples of the square root of two for numbers that small).
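If you really can’t remember your square roots of two, the working looks something like this. It is a back-of-the-envelope sketch that uses the rounded figures from the article (10m focal length, 8.4m mirror, 0.068x crop factor) and applies the usual photographic equivalence rule of multiplying the f-number by the crop factor; the function names are ours.

```python
import math

# Rounded figures from the article
FOCAL_LENGTH_M = 10.0
MIRROR_DIAMETER_M = 8.4
CROP_FACTOR = 0.068


def f_number(focal_length_m, aperture_diameter_m):
    """Focal ratio: focal length divided by aperture diameter."""
    return focal_length_m / aperture_diameter_m


def equivalent_f_number(f_num, crop):
    """Full-frame equivalent aperture under the usual equivalence rule."""
    return f_num * crop


def stops_between(slower_f, faster_f):
    """Stops of light separating two f-numbers (one stop per factor of sqrt 2)."""
    return 2.0 * math.log2(slower_f / faster_f)


actual = f_number(FOCAL_LENGTH_M, MIRROR_DIAMETER_M)
equivalent = equivalent_f_number(actual, CROP_FACTOR)

print(f"actual: f/{actual:.1f}")
print(f"equivalent: f/{equivalent:.2f}")
print(f"difference: {stops_between(actual, equivalent):.1f} stops")
```

The difference works out a little under eight stops, which is where the “around eight stops” figure comes from.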

Each 16MP chip has sixteen readout channels leading to separate amplifiers, each of which is read out at 500k px/sec, meaning a full readout takes about two seconds. All 3216 channels are read out simultaneously. The chips will be maintained at a temperature of -100°C (-148°F) to keep dark current down: Rasmussen quotes a figure of < 0.01 electrons / pixel / second.
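The two-second figure follows from splitting each chip's pixels across its sixteen channels. A minimal sketch of that arithmetic is below; it assumes the pixels are divided evenly between channels and ignores any readout overheads, and it uses the 30-second exposure time the survey is expected to use to put an upper bound on dark signal.

```python
# Figures quoted in the article
PIXELS_PER_SENSOR = 16.4e6
CHANNELS_PER_SENSOR = 16
PIXELS_PER_SECOND_PER_CHANNEL = 500_000
TOTAL_CHANNELS = 3216
DARK_CURRENT_LIMIT = 0.01  # electrons / pixel / second (quoted upper bound)
EXPOSURE_SECONDS = 30.0


def readout_seconds(pixels, channels, rate_per_channel):
    """Time to read one sensor, assuming pixels split evenly across channels."""
    return pixels / channels / rate_per_channel


def aggregate_pixel_rate(total_channels, rate_per_channel):
    """Pixels per second across every channel read in parallel."""
    return total_channels * rate_per_channel


def max_dark_electrons(dark_current, exposure_s):
    """Upper bound on dark signal accumulated per pixel during one exposure."""
    return dark_current * exposure_s


print(f"readout: {readout_seconds(PIXELS_PER_SENSOR, CHANNELS_PER_SENSOR, PIXELS_PER_SECOND_PER_CHANNEL):.2f} s")
print(f"aggregate rate: {aggregate_pixel_rate(TOTAL_CHANNELS, PIXELS_PER_SECOND_PER_CHANNEL) / 1e9:.2f} Gpx/s")
print(f"dark signal: < {max_dark_electrons(DARK_CURRENT_LIMIT, EXPOSURE_SECONDS):.1f} e- per pixel")
```

On these numbers each channel handles just over a million pixels, the whole array is read at roughly 1.6 billion pixels per second, and a 30-second exposure picks up less than a third of an electron of dark signal per pixel.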

But the camera won’t just be used to capture phenomenally high-resolution images. Instead it’ll be put to work shooting a timelapse series of stitched panos.

The camera, which will be installed at the Vera C. Rubin Observatory in Chile, will shoot a series of 30-second exposures (or pairs of 15-second exposures, depending on the noise consequences for the different wavelength bands) of around 1000 sections of the Southern sky. Each region will be photographed six times, typically using the same filter for all 1000 regions before switching to the next, over the course of about seven days.

This whole process will then be repeated around 1000 times over a ten-year period to create a timelapse that should allow scientists to better understand the expansion of the universe, as well as allowing the observation of events such as supernova explosions that occur during that time.

The sensors, created by Teledyne e2v, are sensitive to a very broad range of light, “starting around 320nm where the atmosphere begins to be transparent,” says Rasmussen, “all the way in the near-infrared where silicon becomes transparent (1050nm).”

The sensors, developed in around 2014, are 100μm thick: a trade-off between enhanced sensitivity to red light and the charge spread that occurs as you use deeper and deeper pixels.

No battery life figures were given, but the cost is reported as being around $168M.