In 2016, Adobe developed a technology best described as “Photoshop for audio.” It could change the words in a voiceover simply by typing new ones. Sounds scary, right? It was, and Adobe never released it, for good reason.

Called Project VoCo, the technology was showcased during Adobe’s “Sneaks” event at its annual MAX conference and immediately turned heads. After MAX, the reaction to VoCo was so strong that Adobe felt the need to publish a full blog post defending it.

“It’s a technology with several compelling use cases, making it easy for anybody to edit voiceover for videos or audio podcasts. And, if you have a 20-minute, high-quality sample of somebody speaking, you may even be able to add some new words and phrases in their voice without having to call them back for additional recordings,” Mark Randall wrote, focusing on the positives of the technology.

“That saves time and money for busy audio editors producing radio commercials, podcasts, audiobooks, voice-over narration and myriad other applications. But in some cases, it could also make it easier to create a realistic-sounding edit of somebody speaking a sequence of words they never actually said.”

Randall went on to argue that new technology like this, while it might be controversial or scary, has many positive effects, too. While admitting that “unscrupulous people might twist [it] for nefarious purposes,” he defended developing the technology because of the good it can do.

“The tools exist (Adobe Audition is one of them) to cut and paste speech syllables into words, and to pitch-shift and blend the speech so it sounds natural,” he argued.

“Project VoCo doesn’t change what’s possible, it just makes it easier and more accessible to more people. Like the printing press and photograph before it, that has the potential to democratize audio editing, which in turn challenges our cultural expectations, and sparks conversation about the authenticity of what we hear. That’s a great thing.”

While Adobe may have taken this stance initially, VoCo never saw the light of day. Perhaps Adobe realized that the risks of what VoCo could do outweighed the benefits. Or, perhaps more likely, Adobe’s legal department just couldn’t stomach the idea of defending the company once someone used the technology to put unseemly words into the mouth of a world leader.

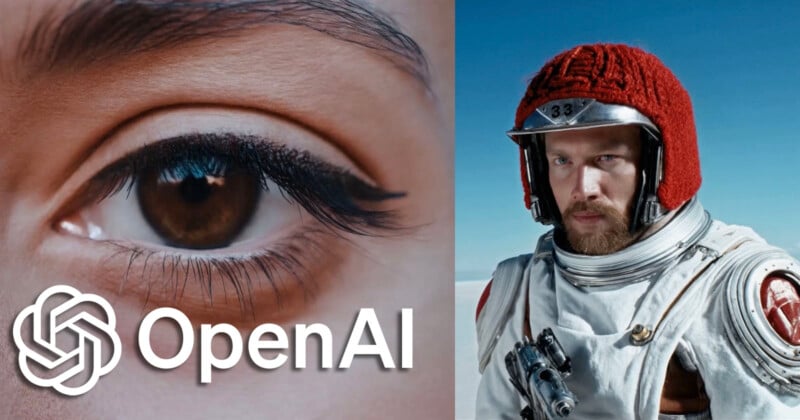

If VoCo Was a Risk, What the Heck Is Sora?

I vividly remember sitting in the crowd watching the VoCo presentation and thinking, “This cannot be put out there. Any good it could do will be vastly outweighed by the damage it can cause.”

I never thought I would be pointing to Adobe as a shining example of ethics and morality, but here we are. Adobe apparently agreed with me, and VoCo was shelved.

But the folks at OpenAI don’t seem to be driven by the same morality, or at least aren’t afraid of potential legal repercussions. Maybe the company thinks it can head off these issues in code, but I can’t help asking: whatever happened to ethical software development?

I find it hard to believe that at no point in the creation of Sora, OpenAI’s brand-new text-to-video artificial intelligence software, did anyone at the company raise their hand with concerns about the ramifications of this technology. If someone did, the company pushed forward anyway, making the active choice to ignore those concerns.

Look, Sora is fascinating. The capabilities of this software, which is still in its infancy, are already astounding, yet I can’t help but be filled with a sense of dread and foreboding.

If VoCo was deemed too much of a risk, how is Sora not? Just because you can make something doesn’t mean you should.

Back in 2016, VoCo felt like a lot. It was far more advanced than anything we had seen before. It was shocking. But in 2024, AI has seeped into so many parts of daily life, and after more than a year of AI image generators, perhaps people don’t realize what’s happening. We are the frog that doesn’t know it’s being boiled.