The Ray-Ban Meta Smart Glasses II, which Chris Niccolls described as impressive but ultimately a “luxury solution in search of a problem” in his review for PetaPixel, look to have found a problem to solve thanks to some fancy new AI technology.

By rolling out multi-modal Meta AI with Vision to the Ray-Ban Meta smart glasses collection — which now includes some brand-new styles — Meta believes it has made the Ray-Ban shades significantly more helpful.

The glasses, at least for wearers in the United States and Canada, can now be controlled using voice commands, offer real-time information to the wearer, and answer questions. That isn't new in itself. What is new is that the AI can handle multiple tasks at once, processing what the camera sees alongside what the wearer says. Combining visual and audio processing significantly changes how users interact with the world through their smart glasses.

“Say you’re traveling and trying to read a menu in French. Your smart glasses can use their built-in camera and Meta AI to translate the text for you, giving you the info you need without having to pull out your phone or stare at a screen,” Meta explains.

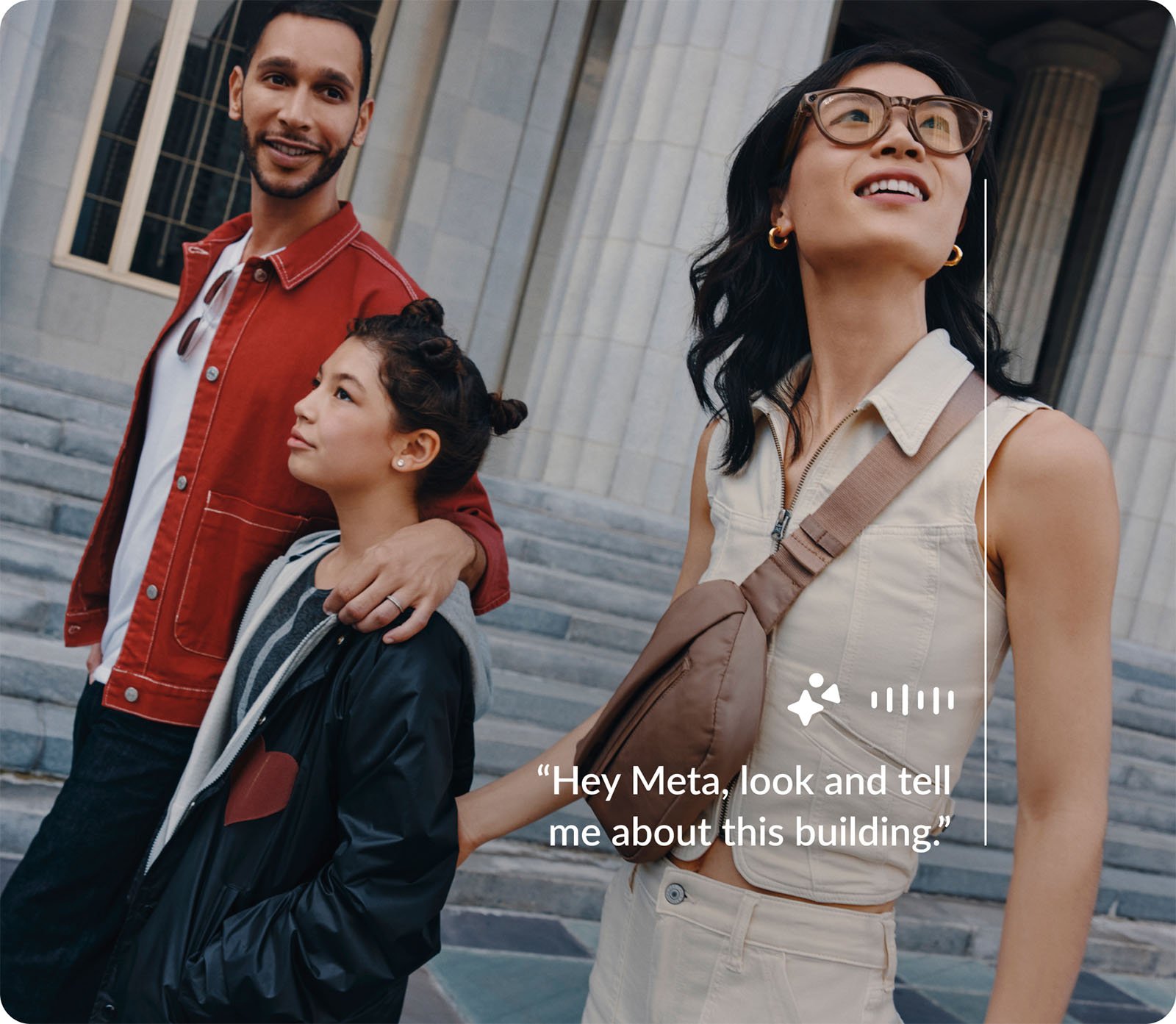

Users can look at a building and ask Meta AI about its history, since the AI can "see" the building through the glasses' built-in camera. A wearer can also open their refrigerator, let the AI see what food they have, and get suggestions for possible meals.

While not unique to Ray-Ban Meta smart glasses, it is a nifty and unobtrusive use of AI-based visual processing.

The Humane AI Pin, which offers some of the same technology in a wearable form factor, has been widely derided, but as Victoria Song writes for The Verge, "It's a bit premature to completely write this class of gadget off."

The Ray-Ban Smart Glasses are, at the very least, proof that wearable AI-powered devices aren't a lost cause. Meta's AI can't do everything yet (which is probably for the best), but it does seem capable of some reasonably valuable things.

The glasses' camera can't zoom, which limits its usefulness for certain AI-aided identification tasks. Still, the idea that someone can simply look at something, mutter a few words, and learn something new, all without pulling out their phone or, god forbid, doing research, is neat.

Of course, a user still needs a phone to use Meta AI. The phone does the heavy lifting, even if tucked away in a pocket. Nonetheless, Ray-Ban and Meta are onto something here.

“To me, it’s the mix of a familiar form factor and decent execution that makes the AI workable on these glasses,” Song writes.

What separates the Ray-Ban Smart Glasses from something like the Humane AI pin is that the Smart Glasses aren’t AI glasses. They’re glasses that do other things, and now they also have relatively sophisticated AI features.

Perhaps one day, AI technology and vision processing will reach a point where a person with significant visual impairment can be told everything they’re “looking” at, thanks to the power of wearable AI. Tech isn’t there yet, but it could get there, and that would change the world.

For now, people can ask their glasses to identify plants and animals, teach them something about history, translate some text, or generate pithy captions for photos. Gotta start somewhere.