On April 23, Microsoft launched Phi-3 Mini, a lightweight AI model and the smallest member of its new Phi-3 family.

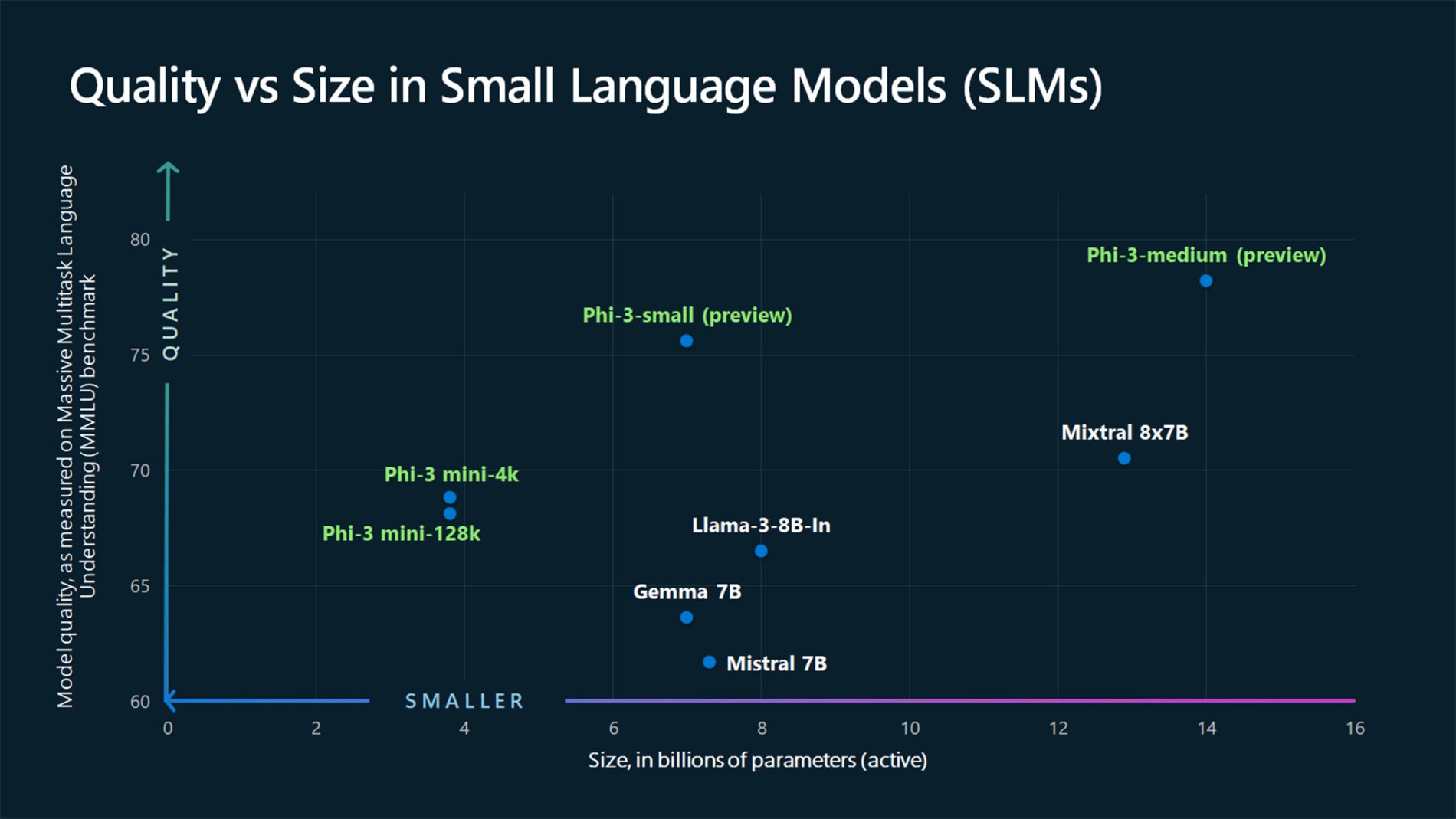

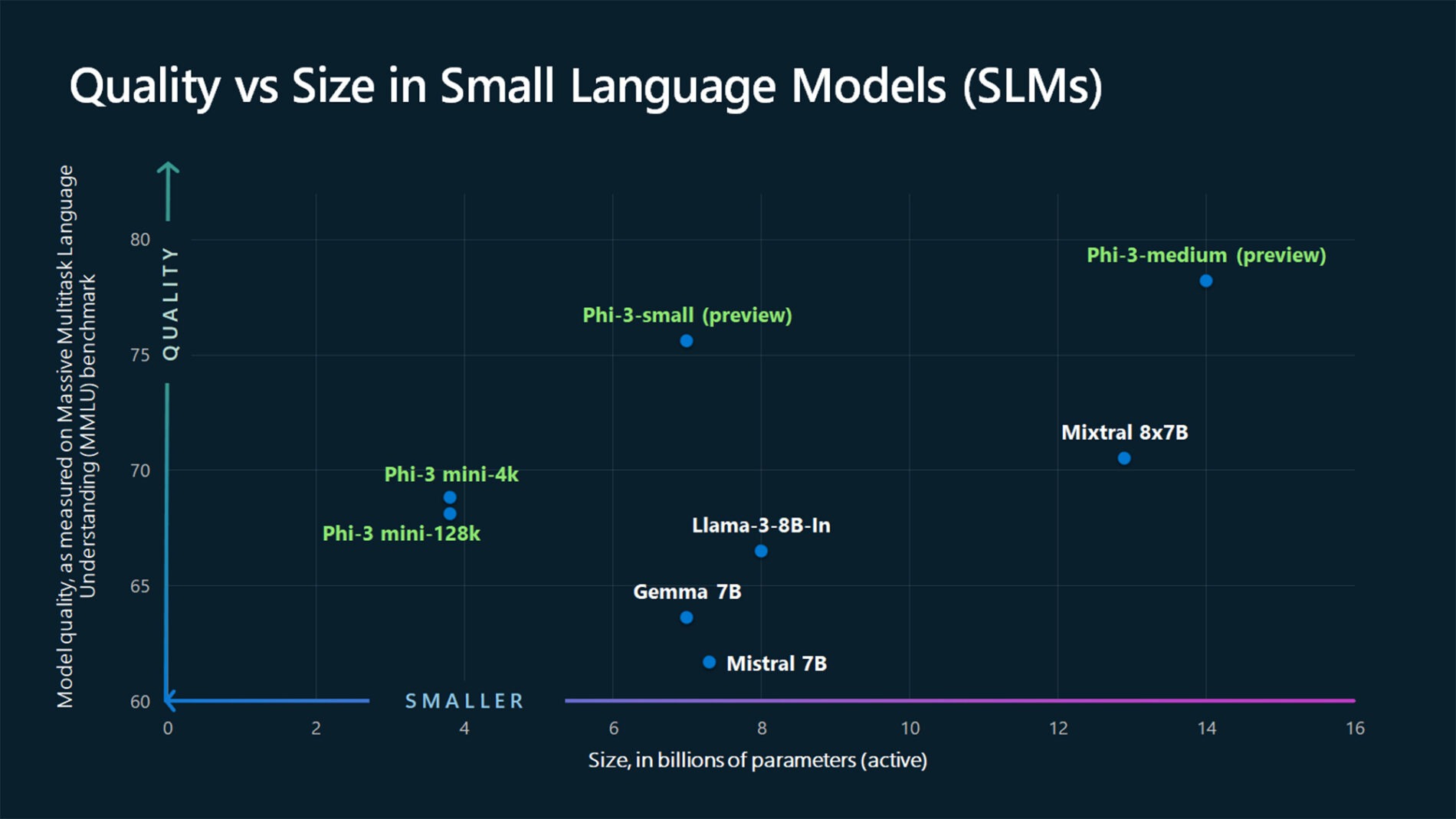

In its technical report (via Windows Central), Microsoft says Phi-3 Mini rivals the performance of much larger models such as Mixtral 8x7B and GPT-3.5 while being small enough to run on a phone. That means your next phone might be able to run more complex AI tasks locally, without needing an internet connection or sending your data to a cloud server.

Other companies have launched small AI models as well, but what sets Microsoft’s Phi-3 Mini apart is how it was trained: with children’s stories.

Microsoft’s Phi-3 Mini model was taught using children’s books

Phi-3 Mini is a 3.8-billion-parameter language model trained on 3.3 trillion tokens (the small chunks of text that language models read and generate). Much of that data comes from a Microsoft-designed “curriculum” inspired by how children learn from bedtime stories, which use simple words and basic sentence structures to explain bigger ideas.
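Why can a 3.8-billion-parameter model plausibly fit on a phone? A rough back-of-envelope calculation helps. The sketch below assumes that only the model's weights count toward memory (it ignores activations, caches, and runtime overhead) and that 1 GB means 10^9 bytes; the 8-bit and 4-bit rows assume the weights are compressed (quantized), which the article does not say Microsoft does on phones.

```python
# Back-of-envelope estimate of Phi-3 Mini's weight storage.
# Assumption: counts raw weights only; activations, caches, and
# runtime overhead are ignored. 1 GB is taken as 10**9 bytes.
PARAMS = 3.8e9   # parameter count quoted in the article
TOKENS = 3.3e12  # training tokens quoted in the article


def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Return the size of the raw weights in gigabytes (10**9 bytes)."""
    return params * bits_per_param / 8 / 1e9


# 16-bit is a common default precision; 8- and 4-bit assume quantization.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(PARAMS, bits):.1f} GB")

# How much training text the model saw relative to its size.
print(f"Training tokens per parameter: {TOKENS / PARAMS:.0f}")
```

Running it prints roughly 7.6 GB at 16 bits, 3.8 GB at 8 bits, and 1.9 GB at 4 bits, plus about 868 training tokens per parameter. Only the compressed versions land comfortably within the memory of a modern smartphone, which is why model size matters so much for on-device AI.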

Eric Boyd, VP of Microsoft’s Azure AI Platform, explained to The Verge: “There aren’t enough children’s books out there, so we took a list of more than 3,000 words and asked an LLM to make ‘children’s books’ to teach Phi.”
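Boyd's description suggests a simple loop: pick words from a fixed list, then ask an LLM to write a simple story that uses them. The sketch below shows only the prompt-building step under that assumption. The tiny vocabulary, the prompt wording, and the function name `build_story_prompt` are all hypothetical stand-ins, not Microsoft's actual word list or pipeline, and the call to an LLM is deliberately left out.

```python
import random

# Hypothetical stand-in for Microsoft's list of 3,000+ words (not the real list).
VOCABULARY = ["apple", "river", "brave", "jump", "friend", "cloud", "tiny", "share"]


def build_story_prompt(vocab: list[str], k: int, rng: random.Random) -> tuple[list[str], str]:
    """Hypothetical helper: sample k distinct words and wrap them in a story prompt."""
    words = rng.sample(vocab, k)  # k distinct words, no repeats
    prompt = (
        "Write a short children's story using only simple words and short sentences. "
        f"The story must include these words: {', '.join(words)}."
    )
    return words, prompt


rng = random.Random(0)  # fixed seed so the sampled words are reproducible
for _ in range(3):
    words, prompt = build_story_prompt(VOCABULARY, 3, rng)
    print(prompt)
    # A real pipeline would send `prompt` to an LLM and keep the generated story.
```

Randomly combining words is one plausible way to get many varied stories out of a fixed vocabulary; how Microsoft actually selected words and phrased its prompts isn't described here.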

Despite being trained on considerably less data than many LLMs, Phi-3 Mini is a capable little bugger. It can’t compete with GPT-4 or other large models trained on a sizable portion of the internet, but it’s still an impressive feat.

Microsoft isn’t the first to release a lightweight AI model (we’ve already seen small models from Google, Anthropic, and Meta), but Phi-3 Mini is one of the first small models to reach this level of performance.

Small AI models certainly aren’t going to replace larger LLMs, but with growing demand for private, on-device AI features, they are likely to have a place in smartphones.

Apple’s iOS 18 is rumored to have a strong AI focus, and, unsurprisingly, rumors suggest Apple is trying to keep many AI features on-device to protect user privacy. Small AI models are exactly what that requires. We’ll have to wait until WWDC 2024, or perhaps even the iPhone 16 launch, to find out whether Apple is taking that approach, but expect to hear plenty more about small AI models and LLMs over the coming year.

Last year felt like the year of AI, but it was just the tip of the iceberg.