A number of media outlets have again been caught passing off fake AI images as real photos, and this time blatantly.

Adobe’s stock image library recently hosted a number of images for sale that showed AI-rendered depictions of the ongoing Israel-Hamas conflict.

After finding these, presumably through a search of Adobe stock photos, a number of news publishers then bought the images and used them as if they were real.

Adobe itself didn’t render these images. Instead, the software giant, creator of photography tools like Lightroom and Photoshop (and more recently the AI image generating and editing system Firefly), lets users sell their own work through its platform.

These can be photos taken with actual cameras, but also images that users create with Adobe’s own machine-learning tools and then sell via the Adobe Stock Library.

For every image sold by a photographer or artist, Adobe gives them 33% of the revenue while keeping the rest. How much an artist or photographer earns depends on the price of their image once it’s licensed and downloaded by a buyer.
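As a rough illustration of that split, here is a minimal Python sketch. The 33% share is the figure quoted above; the $10.00 license price and the `split_revenue` helper are purely hypothetical, not Adobe's actual pricing or payout logic.

```python
from decimal import Decimal, ROUND_HALF_UP

# Contributor share as quoted in this article (33%); treated as an assumption.
CONTRIBUTOR_SHARE = Decimal("0.33")


def split_revenue(license_price: Decimal) -> tuple[Decimal, Decimal]:
    """Return (contributor_earnings, adobe_earnings) for one license sale."""
    cent = Decimal("0.01")
    # Round the contributor's cut to whole cents.
    contributor = (license_price * CONTRIBUTOR_SHARE).quantize(
        cent, rounding=ROUND_HALF_UP
    )
    # Whatever is left over stays with the platform.
    adobe = license_price - contributor
    return contributor, adobe


# Hypothetical license price, for illustration only.
contributor, adobe = split_revenue(Decimal("10.00"))
print(f"Contributor: ${contributor}, Adobe: ${adobe}")
```

At that hypothetical price, the contributor would receive $3.30 and Adobe would keep $6.70.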

Under this arrangement, numerous users have added AI-rendered images to the Adobe Stock Library, hoping to make some relatively easy money.

In many cases, the images they create are of controversial subjects that would otherwise be difficult or expensive to capture with a camera. The Hamas-Israel conflict is an obvious example.

Now, though all such images were and are labeled by Adobe as “generated by AI”, some news outlets apparently got rid of that little detail after buying them. In other words, they passed off entirely artificial visuals of specific events that didn’t happen as real war photos.

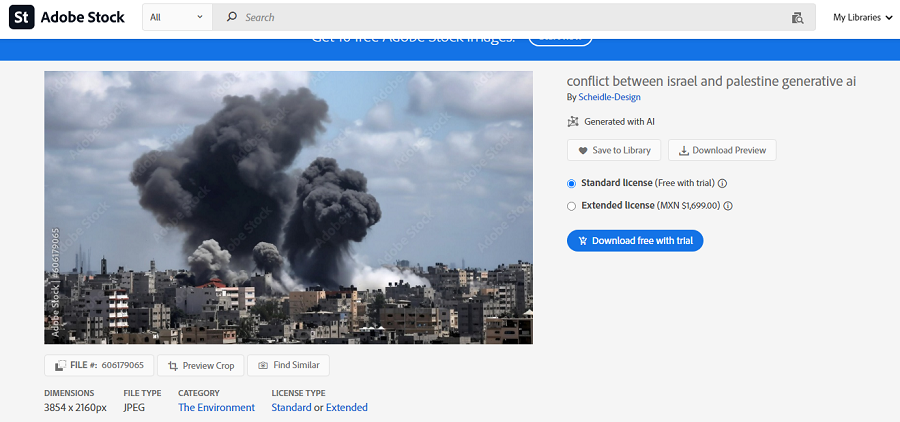

One example of these from Adobe is an image called “Conflict between Israel and Palestine generative AI,” which shows billowing dark clouds of smoke coming from ruined buildings.

It was bought and shared by an assortment of internet news outlets as if real, despite being fake and clearly labeled as such by Adobe.

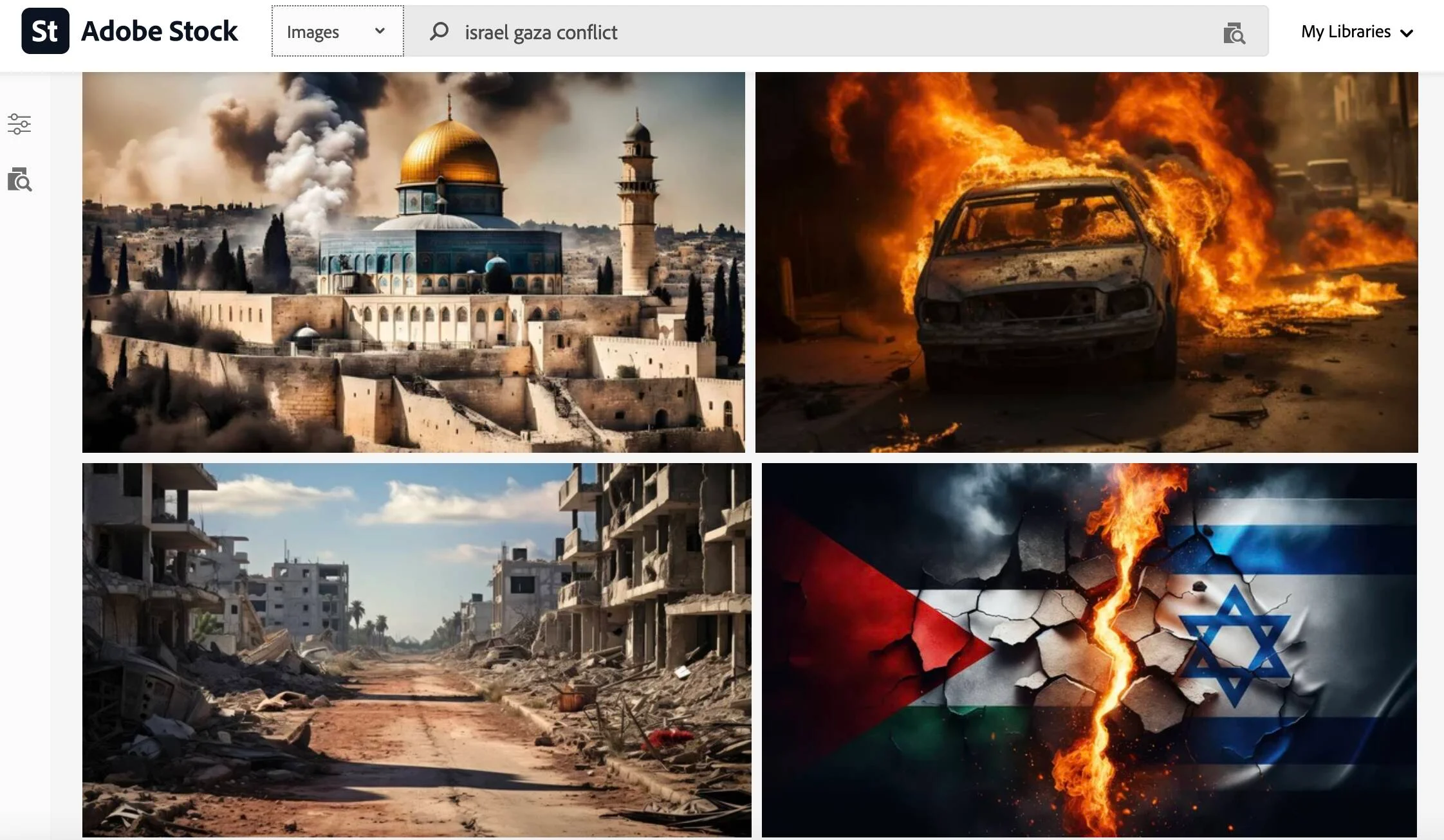

Searching through Adobe’s stock library shows more results in the same vein, with user-generated AI renderings of airstrikes, ruined buildings and burning vehicles in what are presented as the streets of Gaza.

And to be fair, the quality of the renderings is pretty great. To a casual reader browsing a news article without thinking about image details, these renderings could deceive. The news outlet reporters have no such excuse though.

Let’s hope that this trend among news sources doesn’t become ever more pervasive in the coming years.

That users and image platforms cooperate to artificially create images of all sorts of subjects is to be expected. Those subjects also shouldn’t be blocked by ever-shifting ideas of what might or might not offend.

After all, war is a part of the human experience and if AI image rendering for creative/artistic purposes is going to be a thing, it should also be open for creating depictions of our world’s uglier facets and moments.

However, news sites at least supposedly exist to cover fundamental reality, even if they apply their own narrative slant. Presenting completely artificial visuals as real, well, that goes far beyond just a tiny bit of dishonesty.

AI image generation has advanced enormously in the last couple of years, as almost any photographer (sometimes painfully) has realized.

For content posted online as factual, this trend has created an enormous deepfake problem that is only growing in scope.

Several major content creators, tech companies and news outlets have banded together under the Content Authenticity Initiative to create Content Credentials, which use metadata to quickly identify the source of an image, whether real or AI-based.

Adobe, along with organizations like Microsoft, the BBC and the NY Times, is part of this very same initiative.

In fairness to Adobe in this particular case, the company definitively labeled the images in its library as what they are. It was the third-party news sites that decided to be flexible on transparency.

As Adobe explained recently to the site PetaPixel, “Adobe Stock is a marketplace that requires all generative AI content to be labeled as such when submitted for licensing.”

It added:

“These specific images were labeled as generative AI when they were both submitted and made available for license in line with these requirements. We believe it’s important for customers to know what Adobe Stock images were created using generative AI tools.

Adobe is committed to fighting misinformation, and via the Content Authenticity Initiative, we are working with publishers, camera manufacturers and other stakeholders to advance the adoption of Content Credentials, including in our own products. Content Credentials allows people to see vital context about how a piece of digital content was captured, created or edited including whether AI tools were used in the creation or editing of the digital content.”

The Pandora’s Box of AI-rendered content being used with overt dishonesty is already open, but neither the content itself nor its creators are really to blame, as long as those creators label it honestly.

Lazy and dishonest news websites are an entirely different story, particularly when they knowingly use fakery in their “reporting”.