Intel announced this week that it can now run more than 500 local AI models on its new Intel Core Ultra processors, in what the semiconductor giant called a “significant milestone.”

Intel attributed the figure to, among other factors, its investment in client AI and AI tools, including OpenVINO, an open-source toolkit for deploying deep-learning models.

With 500 models able to run across the CPU, GPU, and NPU, Intel didn’t hesitate to call its Core Ultra chips “the industry’s premier AI PC processor available in the market.”

Right now, it’s not clear exactly how many optimized AI models Qualcomm’s Snapdragon X Elite chip or AMD’s new AI chips are compatible with, but if the number is anywhere close to 500, we’ll surely hear about it in the next few weeks.

In the meantime, here’s why 500 optimized AI models running on an Intel Core Ultra chip should matter to you when buying a new laptop.

More AI models = a better laptop experience

The 500 models on Intel’s Core Ultra chips span more than 20 categories of AI, from large language models (the kind of model behind ChatGPT and Gemini) to diffusion, super-resolution, object detection, image classification/segmentation, and computer vision models.

As Intel notes in its announcement on Tuesday, “the more AI models there are, the more AI PC features are enabled.”

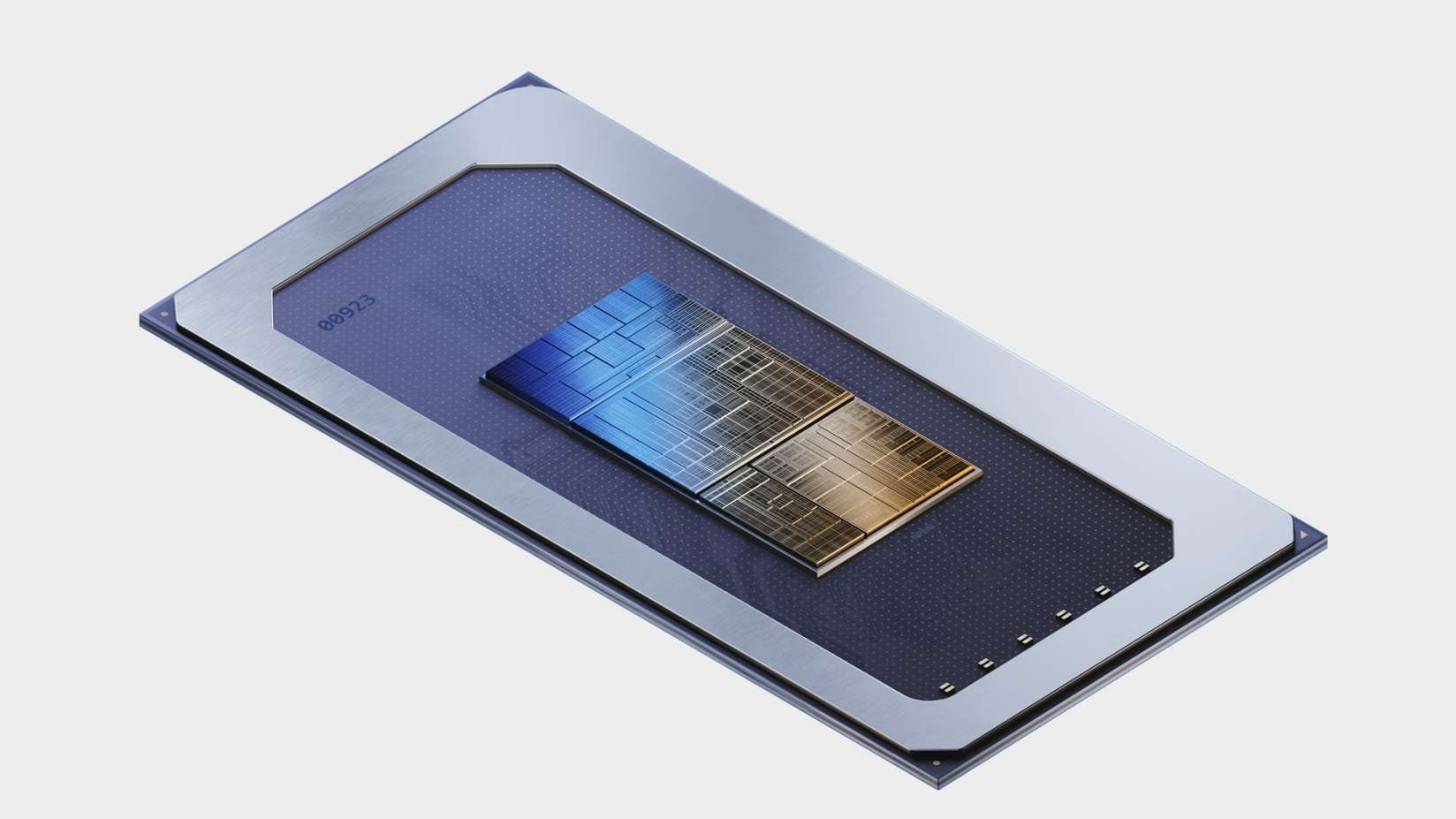

(Image credit: Intel)

Intel explains that “there is a direct link between the number of enabled/optimized models and the breadth of user-facing AI features that can be brought to market.”

Put plainly, the more AI models your laptop’s processor can run, the wider the range of AI-enhanced software features it can support.

A few of the most commonly used AI features include text generation, text paraphrasing or editing, summarization, image generation, and object removal in images.

With more AI models to build from, developers are able to create new AI PC features that we haven’t yet experienced and improve the ones we already have.

Even with its 500 AI models, however, the Intel Core Ultra probably still doesn’t meet Nvidia’s definition of a “premium AI PC,” which centers on a discrete GPU.