The Legend of Zelda: Tears of the Kingdom lead sound engineer Junya Osada began his portion of Nintendo’s 2024 Game Developers Conference panel with a joke many audio engineers in game development will relate to.

“Aren’t you forgetting something?” he asked lead physics programmer Takahiro Takayama after he’d concluded his presentation of the physics system driving Tears of the Kingdom. “You forgot the sound team.”

His deadpan tone prompted laughter, then rolling applause from the crowd at GDC, a lively start to what would become a fascinatingly dense breakdown of the game’s realistic audio engineering.

What Osada laid out in the next 20 or so minutes was as eye-poppingly complex as Takayama’s physics presentation. Osada has been making sound effects for Nintendo since 2005’s Animal Crossing: Wild World for the Nintendo DS.

In a follow-up interview with Game Developer, Osada explained that on those earlier titles, as well as on The Legend of Zelda: Breath of the Wild, he relied on the conventional audio engineering practice of creating a library of sound effects and blending them into gameplay, occasionally building interactive systems that allowed the soundtrack to respond to specific player activities.

Once the mandate came down that Tears of the Kingdom would be a game where players could meld objects together in a litany of combinations across the entire breadth of the game world, he knew he would need to build an intricate rules-based system.

But “intricate” doesn’t come close to capturing what his team accomplished. To fully grasp how robust Tears of the Kingdom’s sound system is, you need to know that late into development, director Hidemaro Fujibayashi would incredulously ask Osada if he and his colleagues had successfully created “a physics system for sound.”

Osada emphasized in our interview that his team didn’t set out to create something so complex; rather, it was a “byproduct” of all the efficiencies they built to manage what would have been a gargantuan sound library if created by hand.

If you want to understand why Osada’s creation is so wondrous, you’re going to need to dig into the nitty-gritty technical details of distance attenuation curves, air absorption, and calculating distance using the same voxels level designers were using to shape the game world.

“All sounds really must be controlled by the same rules”

At the core of Tears of the Kingdom‘s sound system is a fairly simple philosophy: Osada and his colleagues wanted to depict how sound spreads and naturally echoes in every corner of Hyrule.

That’s a taller order than what was required in Breath of the Wild. Tears of the Kingdom contains more varieties of environments than its predecessor. The snowy mountains, open plains, lush waterways, dense forests, and harsh desert (just to name a few) are joined by new dense cave systems, the cavernous world of The Depths, the temples in the skies above, and a deep canyon that cuts through what was once an open plain of Hyrule.

Imagine trying to create assets for a simple arrow shot in all of those environments. The impact of the arrow must account for whatever surface it contacts. The shot must sound different when fired up close, at a medium distance, and far away. The arrow impact must not be drowned out by other sounds. The arrow impact must also not overwhelm the natural noises that may be present. The arrow impact may need to echo, but the echo will carry differently in a cave versus The Depths versus–are you starting to get what Osada was dealing with here?

And that’s just arrows. Throw in swords. The Hobgoblin screams. The Koroks wailing about needing to find their friend. Then think about what was expected to be a near-infinite number of possible item combinations brought together by the Fuse system, and maybe you’ll understand why Osada and his colleagues went “back to the basics” of what it meant to design sound in a three-dimensional space.

The fundamental principles were these: sounds from far away need to sound quieter, sounds that are up close need to sound louder. Sounds to the player’s right should come from the right speaker, sounds to the left should come from the left speaker. Sound should echo in caves, be dulled in absorbing material like snow, and so on, and so forth.
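The left/right rule is commonly implemented with equal-power panning. The sketch below is a generic illustration, not Nintendo’s code; the function name `equal_power_pan` and the azimuth convention (-90 degrees hard left, +90 degrees hard right) are assumptions.

```python
import math


def equal_power_pan(azimuth_deg: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a mono source at azimuth_deg.

    Assumed convention: -90 is hard left, 0 is straight ahead, +90 is hard
    right; values outside that range are clamped.
    """
    az = max(-90.0, min(90.0, azimuth_deg))
    # Map [-90, 90] degrees onto a pan angle in [0, pi/2].
    theta = (az + 90.0) / 180.0 * (math.pi / 2)
    # cos^2 + sin^2 = 1, so total power stays constant as the source moves.
    return math.cos(theta), math.sin(theta)


left, right = equal_power_pan(45.0)  # a source ahead and to the right
print(f"left={left:.3f} right={right:.3f}")
```

Keeping the summed power constant avoids the dip in loudness that a linear crossfade between speakers produces when a sound passes through the center.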

The general process in sound design is to mimic these effects without fully simulating them. Common solutions to the near/far quandary involve creating a distance attenuation curve for a sound effect that’s calculated along a horizontal axis relative to the player’s position, or preparing sound effects for short and long distances and then crossfading between them as the sound moves from one range to the other.
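Both conventional approaches fit in a few lines. The tuning values (5 m, 80 m, 20 m, 40 m) and the function names are hypothetical, chosen only to show the shape of each technique: an authored rolloff curve driven by horizontal distance, and an equal-power crossfade between a “near” and a “far” recording.

```python
import math

Vec3 = tuple[float, float, float]


def horizontal_distance(listener: Vec3, source: Vec3) -> float:
    """Distance on the ground plane (x, z), ignoring height (y)."""
    return math.hypot(source[0] - listener[0], source[2] - listener[2])


def rolloff_gain(distance: float, min_dist: float, max_dist: float) -> float:
    """Authored attenuation curve: full gain inside min_dist, falling
    linearly to silence at max_dist."""
    if distance <= min_dist:
        return 1.0
    if distance >= max_dist:
        return 0.0
    return 1.0 - (distance - min_dist) / (max_dist - min_dist)


def near_far_weights(distance: float, fade_start: float,
                     fade_end: float) -> tuple[float, float]:
    """Equal-power crossfade weights for a (near, far) pair of recordings."""
    t = (distance - fade_start) / (fade_end - fade_start)
    t = max(0.0, min(1.0, t))
    return math.cos(t * math.pi / 2), math.sin(t * math.pi / 2)


# Hypothetical arrow impact 30 m away on the ground plane.
d = horizontal_distance((0.0, 1.7, 0.0), (18.0, 0.0, 24.0))
gain = rolloff_gain(d, min_dist=5.0, max_dist=80.0)
near_w, far_w = near_far_weights(d, fade_start=20.0, fade_end=40.0)
```

Each sound gets its own hand-tuned numbers here, and that per-asset authoring is the part that does not scale to a world the size of Hyrule.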

But lowering the volume isn’t enough for a game as vast as Tears of the Kingdom. Players need to be able to tell what direction sound is coming from and how far away it is depending on the environment. So all sound everywhere must abide by the same rules.

You know, like in real life.

Starting with the fundamentals of sound

“It’s a well-known property of sound that when distance is doubled, volume is halved,” Osada explained in his talk. Once the game knows where the point source is, it can calculate how the sound pressure per unit area attenuates with distance.

In layperson’s terms, loud sounds can be heard at a distance, and quiet ones can’t. If a sound needs to be heard from far away, it needs to be an initially loud sound that quiets as it reaches the player.

In theory the engineers could have relied on simple logarithmic calculations that could capture the halving of sound with the doubling of distance. Except sound doesn’t work that way equally in all environments.

If it did, a rooster’s 100 dB crow could carry 100,000 meters (about 62 miles) before fading into silence. That’s the distance from Tokyo to Mount Fuji, or from Sacramento to San Francisco. Osada joked that no one in the room should expect to hear a rooster crowing all the way over in the capital of California.
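The arithmetic behind the rooster example follows from the inverse distance law for a point source: sound pressure falls in proportion to 1/r, so the level drops by 20·log10(r/r₀) decibels, about 6 dB each time the distance doubles (halving the sound pressure). This sketch assumes the 100 dB figure is measured 1 m from the rooster and ignores air absorption entirely.

```python
import math


def spl_at_distance(spl_ref_db: float, distance_m: float,
                    ref_distance_m: float = 1.0) -> float:
    """Sound pressure level of a point source under pure spherical spreading.

    Pressure falls as 1/r, so the level drops by 20*log10(r / r_ref) dB,
    roughly 6 dB each time the distance doubles.
    """
    return spl_ref_db - 20.0 * math.log10(distance_m / ref_distance_m)


# Assumption: the rooster's 100 dB is measured 1 m away.
for d in (1, 2, 4, 1_000, 100_000):
    print(f"{d:>7} m: {spl_at_distance(100.0, d):5.1f} dB")
```

At 100,000 m the level reaches 0 dB, roughly the threshold of human hearing, which is why spreading loss alone would let the crow carry that far.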

Sound doesn’t reach that far because of “excess attenuation,” or “air absorption.” As sound passes through the air, it attenuates faster than if it were in a vacuum. Other substances in the air (like, say, California’s air pollution) could further impact that rooster’s cry.

Higher-frequency sounds are subject to stronger attenuation. The excess attenuation from air absorption grows in proportion to distance, and it can be modeled as a curve on a logarithmic graph.
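Excess attenuation can be layered on top of spreading loss as a per-meter term that depends on frequency. The coefficients below are approximate values of the kind tabulated in ISO 9613-1 for air at about 20 °C and 70% relative humidity; they are illustrative assumptions, since the talk gave no numbers.

```python
import math

# Approximate air-absorption coefficients (dB per meter) at about 20 °C and
# 70% relative humidity. Illustrative values only, not taken from the talk.
AIR_ABSORPTION_DB_PER_M = {
    500: 0.0028,
    1_000: 0.0050,
    2_000: 0.0090,
    4_000: 0.0229,
    8_000: 0.0766,
}


def total_attenuation_db(distance_m: float, freq_hz: int) -> float:
    """Spreading loss relative to 1 m plus excess attenuation from the air."""
    spreading = 20.0 * math.log10(distance_m)               # same at every frequency
    excess = AIR_ABSORPTION_DB_PER_M[freq_hz] * distance_m  # grows linearly with distance
    return spreading + excess


for f in sorted(AIR_ABSORPTION_DB_PER_M):
    print(f"{f:>5} Hz at 100 m: {total_attenuation_db(100.0, f):.1f} dB down")
```

At 100 m the 8 kHz band loses several decibels more than the 1 kHz band, so a distant sound reads as duller, not merely quieter.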

To capture that phenomenon, Osada and his colleagues used filters to simulate stronger attenuation for higher frequencies. These filters take excess attenuation into account and make adjustments to find the right balance. The sound system also checks which filters need to be applied based on the characteristics of the environment and the sound’s distance from the player.
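A common way to implement distance-dependent filtering is a low-pass filter whose cutoff falls as the source moves away. This sketch uses a one-pole low-pass and a hypothetical mapping from distance to cutoff (20 kHz at the listener, sliding down to 2 kHz at 200 m); the talk did not specify the filter type or the curve.

```python
import math


def cutoff_for_distance(distance_m: float, near_hz: float = 20_000.0,
                        far_hz: float = 2_000.0,
                        max_dist_m: float = 200.0) -> float:
    """Hypothetical mapping: slide the cutoff geometrically from near_hz at
    the listener to far_hz at max_dist_m (and hold it beyond that)."""
    t = max(0.0, min(1.0, distance_m / max_dist_m))
    return near_hz * (far_hz / near_hz) ** t


def one_pole_lowpass(samples: list[float], cutoff_hz: float,
                     sample_rate: int = 48_000) -> list[float]:
    """Filter a block of samples with a one-pole (6 dB/octave) low-pass."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction of the way toward the input
        out.append(y)
    return out


# Alternating +1/-1 samples sit at the Nyquist frequency, the brightest
# content possible, so they show the filtering most clearly.
signal = [1.0, -1.0] * 64
near = one_pole_lowpass(signal, cutoff_for_distance(5.0))
far = one_pole_lowpass(signal, cutoff_for_distance(150.0))
```

The distant copy keeps far less of the high-frequency content than the nearby one, which is the audible effect the filters are meant to reproduce.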

He demonstrated these filters with video of player character Link firing arrows at trees placed at different distances. If you want to see what he was describing for yourself, you can boot up the game and head for the grove of your choice (try not to torture any Koroks along the way).

Environments also shape how sound spreads

Link’s journey above and below the world of Hyrule sends him through a wide variety of different environments, each with spaces that subtly alter the flow of sound. Think about the enclosed spaces you can find in the various structures floating in the sky, or how a claustrophobic tunnel might open up into a massive cave.

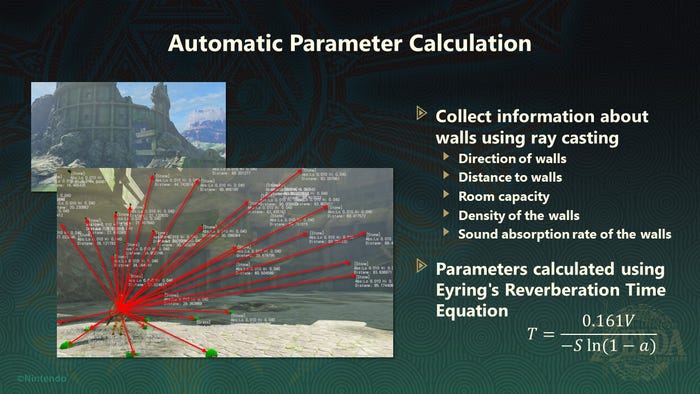

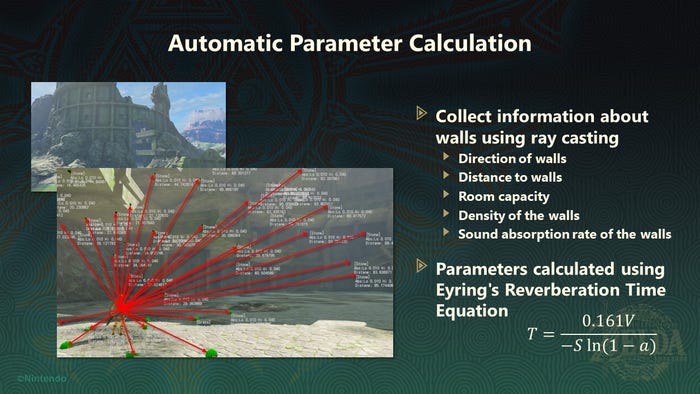

In the simplest of these environments (like the Shrines, where the surrounding materials and the distance from the player are relatively consistent), it’s possible to calculate reverb parameters easily based on each room’s volume. That calculation is made by gauging the direction and distance of nearby walls, as well as the material they’re made of.
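The talk did not name a reverb formula, but Sabine’s equation is the textbook way to turn a room’s volume and the absorption of its surfaces into a reverberation time: RT60 ≈ 0.161·V / Σ(Sᵢ·αᵢ), with V in cubic meters and each surface area Sᵢ in square meters. The sketch assumes a box-shaped room whose dimensions could come from measuring distances to nearby walls; the absorption coefficients are illustrative.

```python
def sabine_rt60(volume_m3: float, surfaces: list[tuple[float, float]]) -> float:
    """Reverberation time in seconds via Sabine's formula.

    surfaces holds (area_m2, absorption_coefficient) pairs; a coefficient of
    0 reflects everything and 1 absorbs everything.
    """
    absorption_area = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / absorption_area


def box_room_rt60(length_m: float, width_m: float, height_m: float,
                  wall_alpha: float, floor_alpha: float,
                  ceiling_alpha: float) -> float:
    """RT60 for a box room, e.g. one sized from distances to nearby walls."""
    volume = length_m * width_m * height_m
    walls = 2.0 * (length_m + width_m) * height_m
    floor = ceiling = length_m * width_m
    return sabine_rt60(volume, [(walls, wall_alpha),
                                (floor, floor_alpha),
                                (ceiling, ceiling_alpha)])


# Illustrative coefficients: hard stone everywhere vs. the same room with a
# floor that soaks up sound (snow or similar).
stone_hall = box_room_rt60(10.0, 10.0, 5.0, 0.02, 0.02, 0.02)
snowy_floor = box_room_rt60(10.0, 10.0, 5.0, 0.02, 0.60, 0.02)
```

Swapping a single surface’s material shortens the reverb dramatically, which is why wall material matters as much as wall distance.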

To calculate those audio needs on more complicated terrain, the game checks the player’s position against the voxels under the game world that shape the terrain. Those voxels can store a mini-library of information that includes their coordinates, whether they’re indoors or outdoors, and whether they’re near the surface of the water or part of the water itself.

Image via Nintendo.

There’s also information vital to the level design team contained in there, as the voxels identify whether the Ascend ability can be used to propel Link from one elevation to the one above it.
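A voxel lookup of this kind might look like the sketch below. The field names, the 4 m voxel size, the sparse-dictionary storage, and the `pick_ambience` rule are all hypothetical; the talk described the kinds of information stored, not the data layout.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VoxelInfo:
    """Hypothetical per-voxel flags shared by sound and level design."""
    indoors: bool = False
    near_water_surface: bool = False
    in_water: bool = False
    ascend_allowed: bool = False  # level-design data stored alongside audio data


class VoxelGrid:
    """Sparse grid keyed by integer voxel coordinates."""

    def __init__(self, voxel_size_m: float) -> None:
        self.voxel_size_m = voxel_size_m
        self.cells: dict[tuple[int, int, int], VoxelInfo] = {}

    def key(self, x: float, y: float, z: float) -> tuple[int, int, int]:
        s = self.voxel_size_m
        return (math.floor(x / s), math.floor(y / s), math.floor(z / s))

    def store(self, x: float, y: float, z: float, info: VoxelInfo) -> None:
        self.cells[self.key(x, y, z)] = info

    def lookup(self, x: float, y: float, z: float) -> VoxelInfo:
        # Voxels with no entry default to dry, open-air terrain.
        return self.cells.get(self.key(x, y, z), VoxelInfo())


def pick_ambience(info: VoxelInfo) -> str:
    """Choose an acoustic treatment from the voxel at the player's position."""
    if info.in_water:
        return "underwater"
    if info.indoors:
        return "indoor_reverb"
    if info.near_water_surface:
        return "shoreline"
    return "open_air"


grid = VoxelGrid(voxel_size_m=4.0)
grid.store(10.0, 2.0, 10.0, VoxelInfo(indoors=True))
player_voxel = grid.lookup(9.5, 0.1, 11.9)  # falls in the same 4 m voxel
```

Because sound and level design read the same records, one lookup can answer both “what should this sound like here?” and “can Link Ascend from here?”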

From a thousand-foot view, this means every sound (every arrow hit, every collision between objects, the in-game music played on various instruments) follows the same rules.

The sound designers regularly heard sound combinations they had no memory of creating

As this system evolved and blended with other systems like in-game instruments, Fuse system creations, and the physics system described by Takayama, an organic soundscape mimicking the natural world began to take shape.

Osada showed a video of a tuba player playing their instrument in a rocky canyon. Link moved around the musician, and the sound naturally transitioned to match his position and elevation, the echo off the high, stony walls becoming more obvious the farther away (and higher up) he traveled.

The philosophy of every object in the game having its own unique sound properties extends into the player-made creations of the Fuse system and hand-crafted objects like horse-drawn wagons. If you listen to one of those wagons trundling along, it won’t sound otherworldly or exceptional. It just sounds like a loud wooden object bumping and rolling with the chains hooking it to a horse’s harness.

But there is no dedicated “wagon sound.” It’s just all the effects of the wheels rolling, the wooden frame rumbling with vehicle suspension, and the chain all playing in sync.
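The idea that a wagon’s sound is just the sum of its parts can be sketched as a function that maps physics readings to per-component gains. Everything here (the component names, the `WagonState` fields, the scaling constants) is hypothetical; it illustrates the principle, not Nintendo’s implementation.

```python
from dataclasses import dataclass


@dataclass
class WagonState:
    """Hypothetical per-frame readings from the physics system."""
    wheel_speed_mps: float
    suspension_velocity_mps: float
    chain_tension_change_n: float


def _clamp01(value: float) -> float:
    return max(0.0, min(1.0, value))


def component_gains(state: WagonState) -> dict[str, float]:
    """Per-part gains in [0, 1]. There is deliberately no 'wagon' entry:
    what the player hears is whatever these parts produce together."""
    return {
        "wheel_roll_wood": _clamp01(state.wheel_speed_mps / 8.0),
        "frame_rumble": _clamp01(abs(state.suspension_velocity_mps) / 2.0),
        "chain_rattle": _clamp01(abs(state.chain_tension_change_n) / 50.0),
    }


# A wagon trundling over a bump: rolling, jolting, and tugging the chain.
gains = component_gains(WagonState(4.0, -3.0, 25.0))
```

A parked wagon produces silence from every component, so nothing needs to be authored for the “wagon at rest” case either.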

Image via Nintendo.

The rigidity of the different bodies calculated by the physics system also shapes the flow of sound. The size of the object and its material can be factored into the calculation, creating different noises for objects of similar sizes but different materials, or similar materials but different sizes.
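How size and material might feed into an impact sound can be sketched with two lookup tables and one scaling rule. The tables and constants are hypothetical. Letting pitch fall in inverse proportion to size is an assumption borrowed from basic acoustics: when an object is scaled up uniformly, its resonant frequencies drop roughly in proportion.

```python
# Hypothetical resonant pitch (Hz) of a 1 m object, per material.
BASE_PITCH_HZ = {"wood": 220.0, "stone": 150.0, "metal": 880.0}
# Hypothetical loudness factor per material.
MATERIAL_GAIN = {"wood": 0.7, "stone": 0.9, "metal": 1.0}


def impact_params(material: str, size_m: float,
                  impact_speed_mps: float) -> tuple[float, float]:
    """Return (pitch_hz, gain) for a collision.

    Pitch scales as 1/size, so bigger objects sound lower; gain grows with
    size and impact speed and is capped at 1.0.
    """
    pitch_hz = BASE_PITCH_HZ[material] / size_m
    gain = min(1.0, MATERIAL_GAIN[material] * size_m * impact_speed_mps / 10.0)
    return pitch_hz, gain


small_crate = impact_params("wood", 0.5, 5.0)   # same material, different size
large_crate = impact_params("wood", 2.0, 5.0)
metal_crate = impact_params("metal", 2.0, 5.0)  # same size, different material
```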

Most of Osada’s talk covered the naturalistic sounds that simulated the real world. But Tears of the Kingdom also features sounds natural to the fantasy world of Hyrule and gameplay-driven audio effects like the chime of picking up a Rupee.

Those sounds were created by leveraging classic sound engineering processes, like combining instrumentation or layering other noises to create otherworldly effects. This process let them create sounds that “gave an expression that matched the image,” Osada explained. There was still a balance to be struck between expanding into rules-based sound engineering and classic “manual” sound effect creation.

Even sounds that seem like they could be independently authored follow the same rules. The sound of massive Flux Construct enemies, a metal hook sliding down a rail, and the creaking of suspension bridges are all generated by sound files assigned to each object, which are then modified based on their distance to the player and their interaction with other objects.

How far does this process go? In our chat with Osada, he explained these principles even applied to game menus and other parts of the user interface. Open up the game menu and its sound is being calculated as if it is closer to the screen itself—nearer to the player—than it is to Link.
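The idea that every sound is a file attached to an object, with menu sounds treated as sitting close to the screen rather than near Link, might be modeled like this. The `Emitter` type, the 0.5 m menu distance, measuring world sounds from Link’s position, and the simple 1/r gain are all hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Emitter:
    """A sound file attached to an object in the world or in the UI."""
    sound_file: str
    position: Vec3
    is_ui: bool = False


UI_DISTANCE_M = 0.5  # hypothetical: menu sounds sit just in front of the screen


def effective_distance(emitter: Emitter, link_pos: Vec3) -> float:
    """World sounds are measured from Link; UI sounds from the screen."""
    if emitter.is_ui:
        return UI_DISTANCE_M
    return math.dist(emitter.position, link_pos)


def distance_gain(emitter: Emitter, link_pos: Vec3, ref_m: float = 1.0) -> float:
    """Inverse-distance pressure gain, clamped to 1.0 inside ref_m."""
    return ref_m / max(effective_distance(emitter, link_pos), ref_m)


link = (0.0, 0.0, 0.0)
menu = Emitter("menu_open.wav", position=(500.0, 0.0, 0.0), is_ui=True)
bridge = Emitter("bridge_creak.wav", position=(6.0, 8.0, 0.0))
```

The menu sound stays at full gain no matter where its nominal position is, while the bridge 10 m from Link is attenuated by the same rule as any other world sound.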

The system grew so dense that Osada’s colleagues in the sound engineering department were “surprised” by the high quality of sounds the system produced.

They’d regularly hear sounds they had no memory of creating.

This phenomenon is what drove Fujibayashi to dub it “a physics system for sound.”

How did this system change Nintendo’s development process?

In our post-panel conversation, Osada compared the process to the way his colleagues building 3D assets for Tears of the Kingdom started by using Maya to create simple versions of an asset, then let that initial version lead them toward the final in-game iteration.

That process mirrored how the ‘physics system for sound’ was born. “In this way, we were able to [create] a functioning sound system that works organically,” said Osada, noting that he’s looking forward to seeing how this process can be used on games in the future.

His regular mention of collaborating with other departments and observing how they worked made it seem as though the sound engineering team was able to work more closely with their peers on Tears of the Kingdom.

Osada acknowledged that was the case. “Up until now, sound implementation in the pipeline takes place in the second half of game development,” he said. “That sometimes limits the ways our team was able to implement ideas and have influence on the game.

“A system like this lets the team work closely [with others] to closely implement sound…and have conversations with other disciplines.”

He seems to suggest that a studio can’t just create its own rules-based systems in a room that only contains audio engineers. Brilliant, immersive audio only comes to life when all disciplines are regularly partnering up to evaluate each other’s work.

Tears of the Kingdom‘s sound system feels like a revelation

There’s something profound in what Osada described. By pursuing ever more efficient processes to blend together the many sound effects of Tears of the Kingdom, he and his colleagues accidentally made a tool so powerful it brushes up against how sound organically occurs in the natural world.

But the technical wizardry isn’t all that makes this such a groundbreaking piece of technology. Osada’s explanation that this allowed the sound team to work with their colleagues earlier in the development process, and that it gave them deeper insights into how their colleagues worked, echoes what Takayama described about how the game’s physics system was only made possible by closely collaborating with other departments.

Technical director Takuhiro Dohta echoed this sentiment in his closing remarks at the GDC panel, explaining that the sound and physics departments had “things in common when it came to how they evolved working on this game.”

Both teams created rules-based systems that allowed for greater creative freedom. Both systems added layers of complexity to interactions that occur within the game. And both systems enabled new “discoveries”–not just for players, but for the developers as well.

No single piece of Tears of the Kingdom exists without the others. Developers wanting to recreate its “multiplicative” magic don’t need to create a fully responsive game world. They should aspire to see what happens when they bring teams together to collaborate on systems-driven gameplay.

Game Developer and Game Developers Conference are sibling organizations under Informa Tech.