Apple Vision Pro: Specifications

| Apple | |
|---|---|
| Device | |
| Image | |
| Weight | |
| Display and optics | |
| Processor | |
| Storage | |
| Cameras / Tracking | |
| Battery and runtime | |
| Connectivity | |
| Sound | |
| Other | |
| Input | |
| Operating System | |
| In the box | |
| Announcement date | |
| Price | |

Apple Vision Pro at a glance

After years of speculation, rumors, leaks, and anticipation, the Apple Vision Pro was finally announced on June 5, 2023. Is this the device we were all waiting for? Perhaps not, but it’s definitely one of the most advanced mixed-reality headsets announced to date.

Sporting a futuristic and more flexible design, Apple leveraged its vast experience in building smartphones, smartwatches, and headphones to deliver what the company is calling a ‘spatial computing device.’ The Vision Pro doesn’t require controllers, which means you can interact with both virtual and augmented reality using only your hands, eyes, and voice. Believe me, this calls for cutting-edge technology.

Moreover, as a software company, Apple understands the importance of user experience. Drawing upon its extensive background in software development, Apple designed the visionOS not merely to mirror your computer screen, but to deliver a unique experience on a brand-new platform. This should make all the difference.

Another positive aspect of the device is the emphasis on privacy and security, a legacy of Apple’s iPhone and Watch Series. In stark contrast to Meta—the leading company in the virtual reality industry—Apple has proven its commitment to keeping what is private even more private. All image and sound processing occurs on the device itself.

Furthermore, the Vision Pro offers a more social experience through its outward-facing display feature, which Apple calls EyeSight. This lets others see whether the wearer is engaged in an immersive experience or attentive to their surroundings.

However, as previously mentioned, this might not be the device we were all eagerly anticipating. While Apple has not disclosed the official dimensions or weight of the Vision Pro, those who have tested it confirm that it is not lightweight. This means that even though the battery is not built into the headset, but connected via a sort of MagSafe cable, spending extended periods with the device on one’s head may cause discomfort, potentially limiting the mixed reality experience.

Also, advanced technology often translates to higher costs. In other words, if this is the most advanced headset yet announced, it’s also likely the most expensive one. Consequently, only a select few will be able to afford this technology, potentially making this industry even less accessible to the masses. Well, at least until a Vision SE version hits the shelves.

In this Apple Vision Pro guide, we will share all the official features and hardware specifications with you, and also provide an overview of the headsets that serve as the best alternatives to Apple’s device. Let’s dive in.

Apple Vision Pro main features

Similar to iOS 17, iPadOS 17, and macOS Sonoma, Apple categorizes its software features into several main categories: Productivity, Entertainment, Social, Health, and others. This structure is also reflected in visionOS. Many of the main features of Vision Pro fulfill these platform pillars as well.

In this section, I’d like to share with you some of the possibilities we’ve uncovered with the Apple Vision Pro software. Throughout, I’ll be discussing this in the context of ‘what’s the device for.’ Now, let’s take a look at some of the main features of Apple Vision Pro:

Productivity

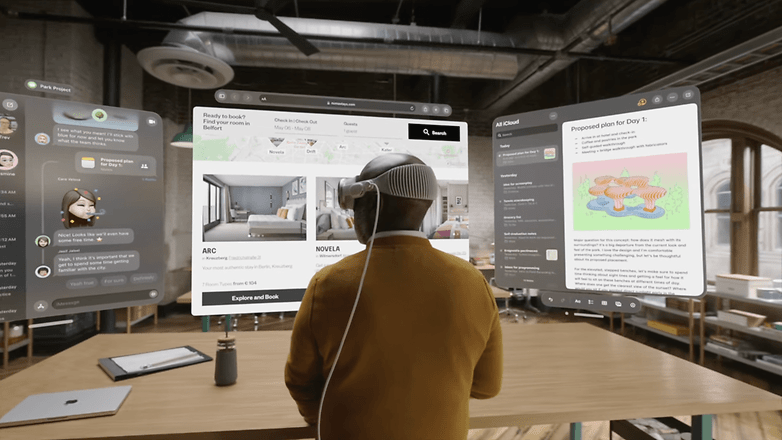

Apple describes its Vision Pro headset as a spatial computing device. In other words, it’s designed to bring a suite of productivity software and services into our reality, displaying them right before our eyes to augment our visualization of the dashboard. Yes, it’s giving off Minority Report vibes!

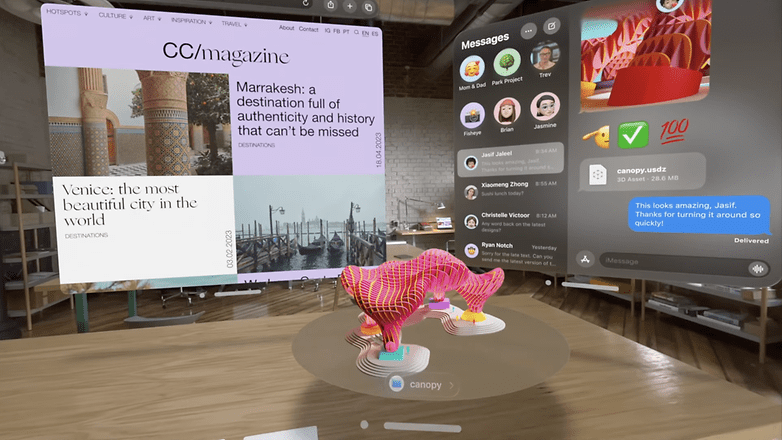

So far, we only have the demo version created by Apple to rely on, but visionOS reminds me a lot of iPadOS and, at some levels, macOS—it’s almost like a blend of these two software systems. It offers multiple-screen navigation and full integration with Apple’s ecosystem. Just to give you an idea of how seamless it can be, you can connect a MacBook to the Vision Pro simply by looking at it.

There isn’t much information available about the capabilities of multiple Apple ID profiles or screen sharing with the Vision Pro. Therefore, I’m basing my expectations on the experience offered by apps like Freeform, Google Meet, and Microsoft Teams. I envision office team meetings happening in real time, with all participants taking advantage of the mixed reality experience offered by the Vision Pro.

However, it’s still unclear whether people using alternative headsets could participate in these meetings and view the same opened projects. The concept has great potential for workplace solutions, but without such integration, it could significantly undermine the overall concept.

Entertainment

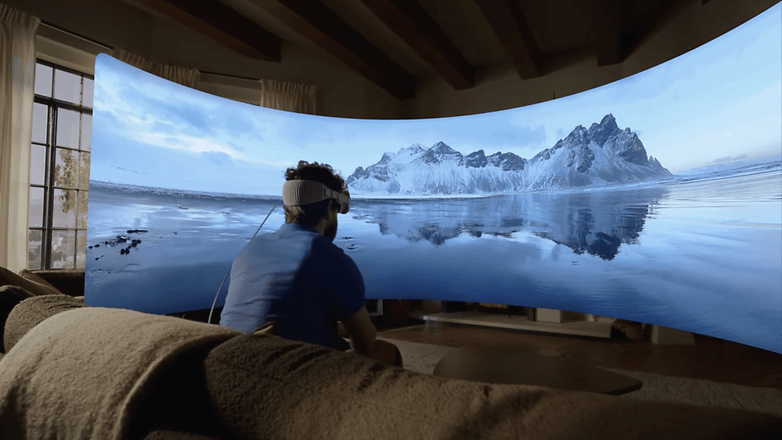

Fun is arguably one of the most anticipated features of a virtual reality headset. Apple, having already made a foray into the streaming industry and even garnering an Oscar for movie production, understands what, when, and how you like to watch content.

The Apple Vision Pro aims to elevate your streaming experience to a more immersive level than what’s offered by your iPhone, iPad, MacBook, or Smart TV. The device promises to enhance not just movies or TV series, but also sports, news, and all other types of content you typically consume on your devices. However, Apple Vision Pro won’t get Netflix and YouTube apps at launch.

Combined with Spatial Audio technology, this device can provide a movie theater experience, particularly given the scalability of the virtual screen: according to Apple, it can feel up to 100 feet wide. Essentially, this offers an IMAX-like viewing experience.

When it comes to gaming, the possibilities are also vast due to the screen’s scalability. Although Apple doesn’t ship the device with controllers, it is compatible with third-party gaming devices. However, according to Apple, the future of gaming on Vision Pro is now in the hands of developers. So let’s see which game titles will be available for visionOS in the future.

The remaining question pertains to shared experiences. Most depictions of people using the Vision Pro show them alone. Even though I live alone, I enjoy watching movies with friends or family at home. What I’m suggesting here is that while this device appears to be designed for a highly individual immersive experience, it’s unlikely to replace shared viewing experiences on traditional platforms like your home TV.

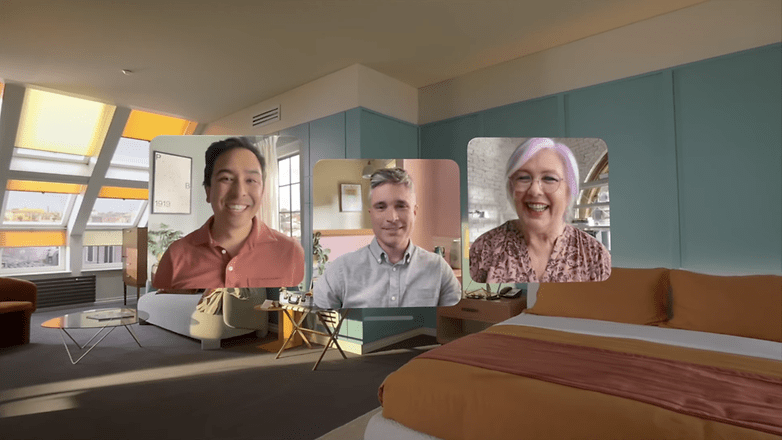

Social

FaceTime plays a significant role in Apple’s ecosystem, and its functionality has been elevated to new heights with visionOS. Firstly, FaceTime calls now exploit the surrounding environment, extending beyond the device’s display to virtually surround us with the images of those with whom we’re conversing. This is the essence of virtual reality, isn’t it?

Apple pushed boundaries even further by designing avatars for those wearing Vision Pro, going beyond mere Memojis. These so-called “Personas” are digital representations of the users, created using advanced machine learning techniques capable of mirroring facial and hand movements in real time. More on that in the hardware section.

Additionally, it’s possible to share experiences, such as watching a movie, browsing photos, or collaborating on a presentation during FaceTime calls.

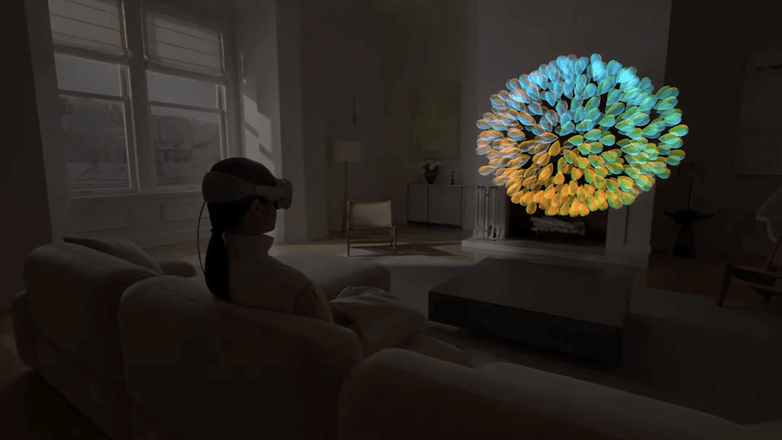

Health

In addition to productivity, entertainment, and social connections, another prominent use case for Vision Pro lies in the health sector, a key category for Apple. Leveraging a comprehensive catalog of mindful practices and workouts available on Fitness+, Apple has developed a robust mixed-reality platform for subscribers of the fitness service.

The blending of realities allows workouts to expand into a three-dimensional environment, offering a more immersive experience during meditation sessions or a vivid, motivational running session on the treadmill. However, I believe that this latter scenario would necessitate an additional seal to protect the device during intense, sweat-inducing moments.

Apple Vision Pro hardware specifications

Build quality

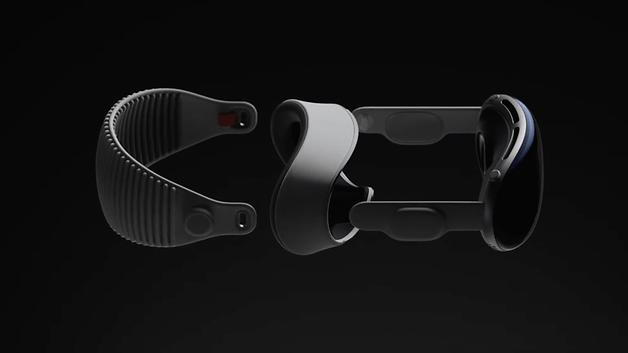

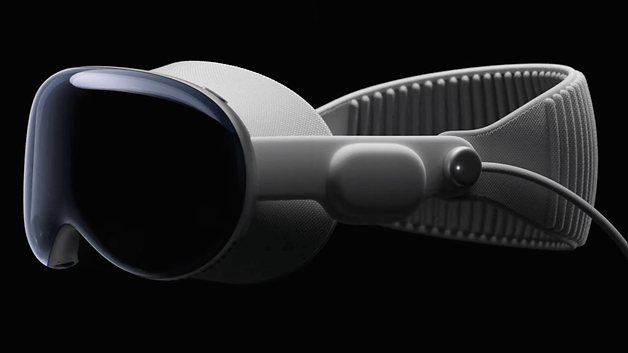

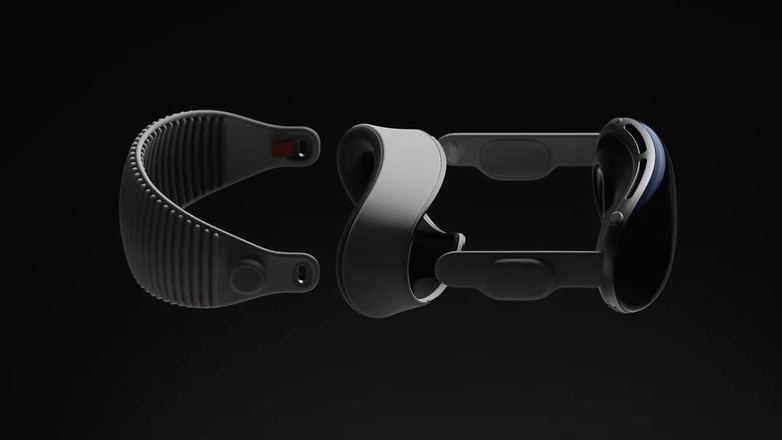

Mixed reality is largely about how quickly the hardware can process images and commands with a comfortable design solution. If a headset only meets one of these requirements, it can be considered unbalanced. That being said, Apple engineers designed the Vision Pro with a primary focus on flexibility. The device can essentially be broken down into four main components: the lenses, a seal, a headband, and a battery pack.

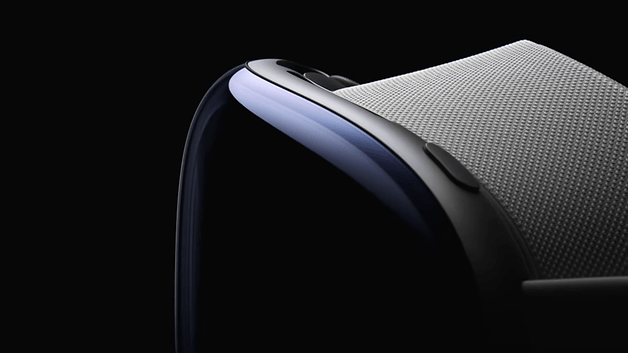

- The lenses: The first component of this modular system is a single, unified piece composed of a glass lens and an aluminum alloy frame. This structure houses all the internal hardware, including the chipset, sensors, and cameras.

- The light seal: The light seal keeps unwanted light from reaching the eyes and eliminates distractions in the wearer’s peripheral vision. By providing a more focused visual experience, it enhances the user’s immersion in the mixed-reality environment.

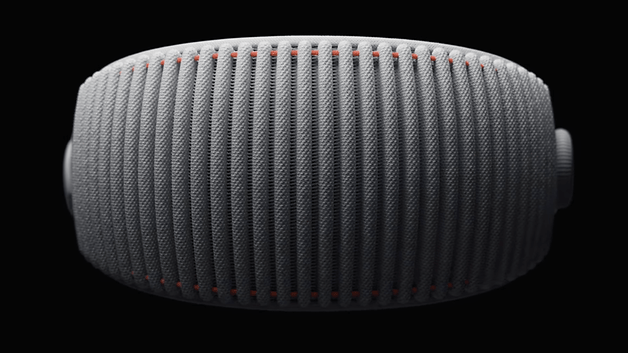

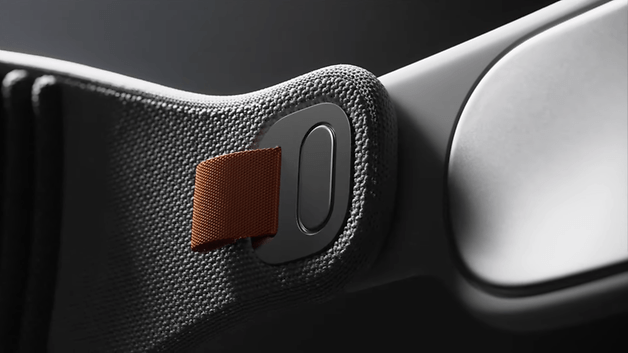

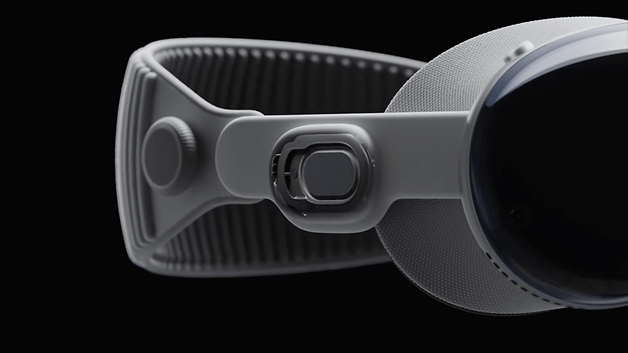

- The headband: The headband of the Vision Pro is designed with flexibility in mind. It’s constructed from a knit fabric, providing a cushioned, breathable, and stretchable solution.

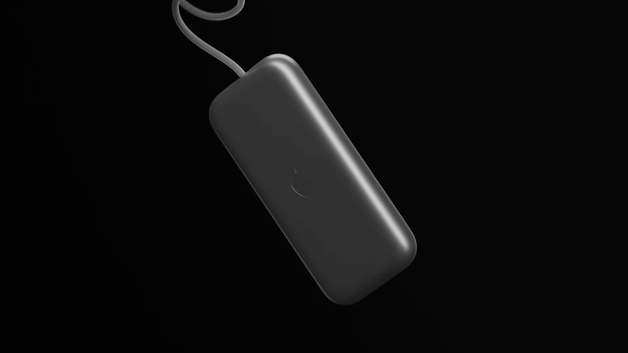

- The battery pack: Another key part of this modular system is the battery pack, which attaches to the headset using a supple woven cable—still not confirmed as a MagSafe connection though. However, this component does require some kind of pocket or belt to secure it while the Vision Pro is being used. This practical design allows for extended use without adding unnecessary weight to the headset.

Our impression of this approach is very positive. Modular technology always offers a more personalized experience and provides flexibility when it comes to swapping parts. For instance, the battery design can facilitate extended use of the device, as it is replaceable. This means you can easily switch to a charged battery while a second pack is recharging.

Moreover, the fitting mechanisms are evidently inspired by the design of the Apple Watch Series, and this influence can also be seen in the glasses and the entire aluminum structure—from the frame to the top button to capture photos and videos, down to the digital crown that zooms in and out. Even the adjustment dial incorporated in the headband shows this inspiration. Personally, I find it to be an intelligent and consistent design choice.

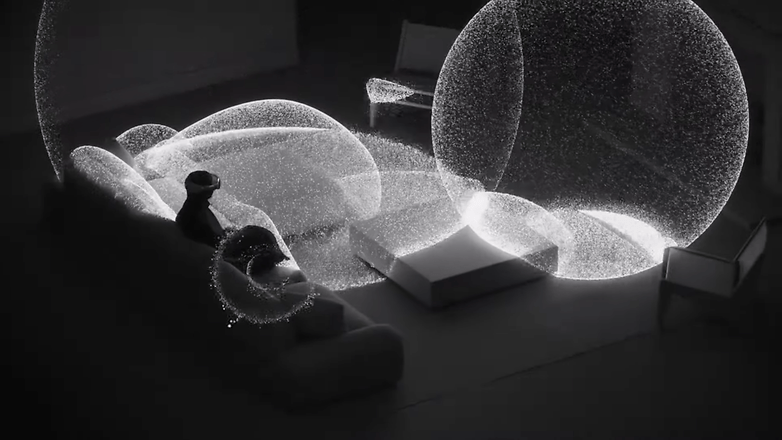

Speakers and Spatial Audio

Located on the stem of the Vision Pro, we have dual-driver audio pods strategically positioned to be near the ears of the wearer. A significant development in audio technology borrowed from the AirPods has been incorporated: Spatial audio. In our review of the AirPods Pro 2, we noted that the spatialization is excellent, resulting in a much more immersive sound experience. This also greatly enhances the viewing experience for compatible movies and series.

So, what does Spatial Audio actually do? Imagine you’re wearing headphones. The sound that reaches your ear inside the headphones is quite different from the original sound because it gets bent and twisted, much like light in a funhouse mirror, by your ears and your head. Normally, this bending helps us figure out where a sound is coming from. But headphones don’t play nicely with this because the sound is coming directly from the speakers on or in your ear.

Now, scientists model this effect with something called a Head-Related Transfer Function (HRTF). It’s a fancy way of saying that they use math to imitate what your head and ears do to sounds. But there’s a challenge: everyone’s head and ears are different!

That’s where Apple’s Personalized Spatial Audio comes in. Starting with iOS 16, Apple allows you to create a personal profile, in effect your very own HRTF, by scanning your head and ears with your iPhone’s TrueDepth camera (the one used for Face ID). This means that the spatial audio of your Vision Pro’s audio pods can be tailored to you. It might even be possible to do this scan directly with the headset itself.
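To make the HRTF idea more concrete, here is a minimal sketch in Python. It assumes a toy model in which each ear’s response is a single delayed, attenuated copy of the sound (the time-domain counterpart of an HRTF is usually called a head-related impulse response). The function names and the delay and gain values are hypothetical and chosen only for illustration; real responses are measured per person and are far more detailed, and this is not Apple’s implementation.

```python
def convolve(signal, kernel):
    """Direct-form discrete convolution of two lists of floats."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


def toy_hrir(delay_samples, gain):
    """Hypothetical impulse response: one delayed, attenuated tap.

    A measured response would have many taps and differ for every listener.
    """
    return [0.0] * delay_samples + [gain]


def spatialize(mono, hrir_left, hrir_right):
    """Filter one mono signal through a separate response for each ear."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# A sound source on the listener's right: the right ear hears it
# immediately and at full level, the left ear later and quieter.
mono = [1.0, 0.5, 0.25]
left, right = spatialize(mono, toy_hrir(3, 0.6), toy_hrir(0, 1.0))

print("left ear: ", left)
print("right ear:", right)
```

The small time and level differences between the two outputs are the kind of cue the brain uses to place a sound in space; personalization amounts to swapping in responses that match your own head and ears.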

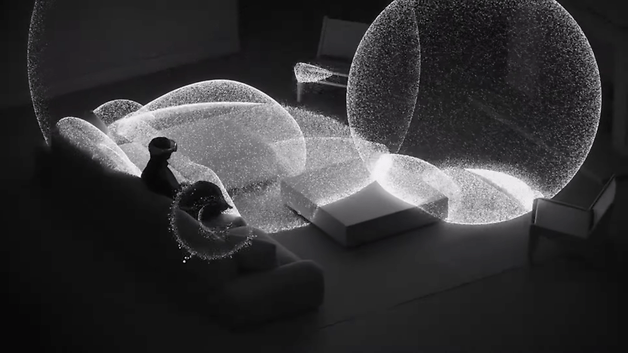

The Vision Pro also possesses audio raytracing capabilities. These use the built-in sensors to interpret the materials and objects in the room, creating an impression that sound is emanating from the surrounding environment. Furthermore, it employs 3D mapping to understand the positions of walls, furniture, and people, enhancing the perception that sounds and imagery exist physically within the room.

Displays, chipsets, cameras, sensors, and tracking

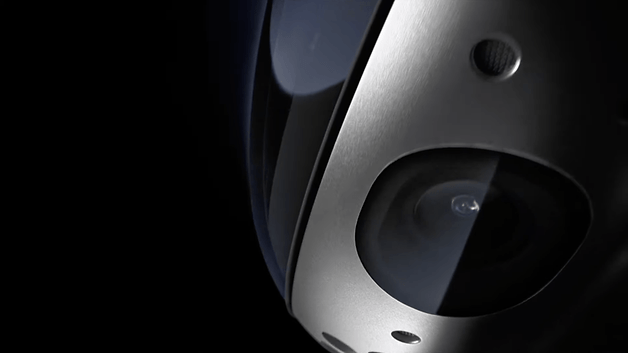

Apple has stated that in the nearly nine years of developing the Vision Pro, they’ve patented more than 5,000 technologies. This is an impressive achievement. Some of these custom technologies are directly built into the lenses.

Displays

Let’s start with the display. Apple utilizes a micro OLED display system (not to be mistaken with micro LED), which, according to the company, fits 64 pixels into the space traditionally occupied by a single iPhone pixel. This equates to packing 23 million pixels into two panels, roughly the size of a small matchbox. That’s a staggering number of pixels to perceive. In fact, it’s more than the pixel count of a 4K TV for each eye.
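The comparison with a 4K TV can be checked with a quick calculation. The sketch below assumes the quoted 23 million pixels are split evenly between the two panels (Apple’s figure is rounded, so the result is approximate) and uses the standard 4K UHD resolution of 3840 × 2160.

```python
# Figure quoted in the text: about 23 million pixels across two panels.
TOTAL_PIXELS = 23_000_000
PANELS = 2

# Standard 4K UHD television resolution.
UHD_4K_PIXELS = 3840 * 2160


def pixels_per_eye(total=TOTAL_PIXELS, panels=PANELS):
    """Split the quoted total evenly between the panels (an assumption)."""
    return total / panels


per_eye = pixels_per_eye()
ratio = per_eye / UHD_4K_PIXELS

print(f"Pixels per eye: {per_eye:,.0f}")
print(f"4K UHD pixels:  {UHD_4K_PIXELS:,}")
print(f"Ratio:          {ratio:.2f}x")
```

Under that assumption, each eye gets roughly 11.5 million pixels against about 8.3 million for a 4K TV, or around 1.4 times as many.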

A custom three-element lens enables video rendering at true 4K resolution, supporting wide color and high dynamic range (HDR). Apple used specialized catadioptric lenses and supplemented them with Zeiss optical inserts, which attach magnetically to the lenses, for any necessary vision correction.

As you might see in the round-up review section of this guide, Apple has apparently managed to build one of the most advanced displays among the VR goggles currently available.

Chipsets

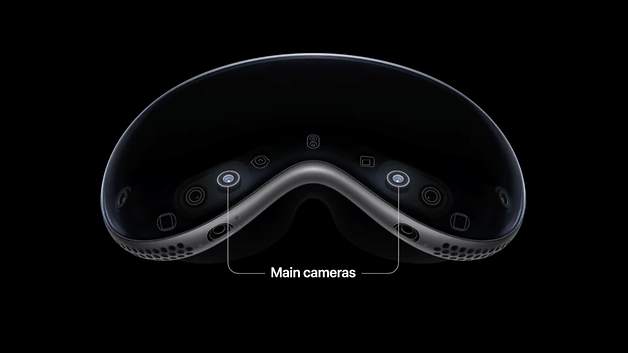

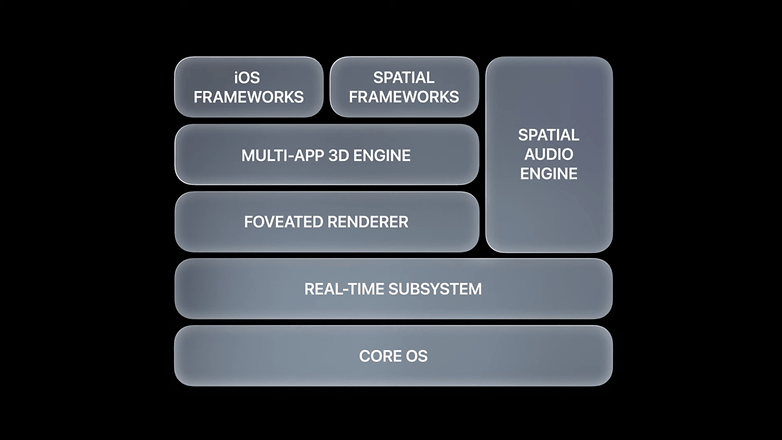

What powers this device? The Vision Pro is driven by Apple’s dual-chip silicon design. The M2 chip ensures the device can operate independently, while the newly debuted R1 chip manages input from an array of 12 cameras, five sensors, and six microphones. This configuration assures a content experience that is as close to real-time as possible, eliminating lags that can cause motion discomfort.

Apple said that the R1 chip can relay new images to the displays within 12 milliseconds, which is eight times quicker than the blink of a human eye. Details about the Vision Pro’s memory remain scant as of now, while the storage options are listed in the pricing section of this guide.
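As a quick sanity check on that comparison, the two quoted numbers imply a particular blink duration. The snippet below only multiplies Apple’s figures; the constant and function names are illustrative.

```python
R1_LATENCY_MS = 12   # image relay time quoted by Apple for the R1 chip
BLINK_SPEEDUP = 8    # "eight times quicker than a blink"


def implied_blink_ms(latency_ms, speedup):
    """Blink duration that the two quoted figures imply together."""
    return latency_ms * speedup


blink_ms = implied_blink_ms(R1_LATENCY_MS, BLINK_SPEEDUP)
print(f"Implied blink duration: {blink_ms} ms")
```

The result, 96 milliseconds, sits at the fast end of commonly cited blink durations, so the claim looks plausible as a rough comparison rather than a precise measurement.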

When it comes to the computational aspects of the Vision Pro, most of the information we have is quite superficial, so we don’t yet fully understand its thermal system’s design or function. We do know that it’s “cool” and “quiet”.

Given that this device represents advanced computing technology, its processing power is likely substantial, which implies a significant amount of heat being generated close to the wearer’s face. My only hope is that it will not easily overheat.

Here, I think one of the cleverest choices Apple made was to include a separate battery pack. This not only results in a lighter headset but also prevents the battery cell from overheating while plugged in.

Real-time camera and sensor systems

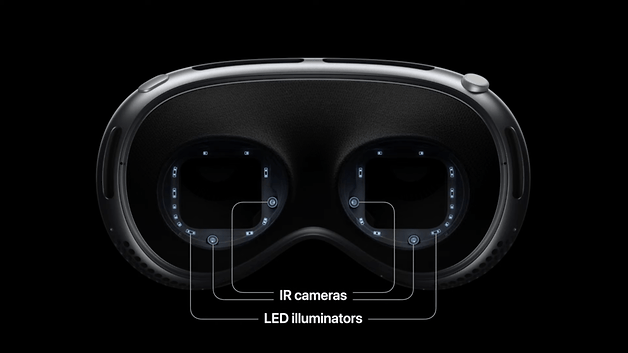

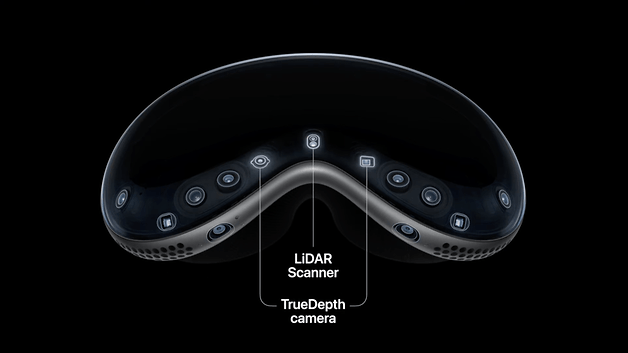

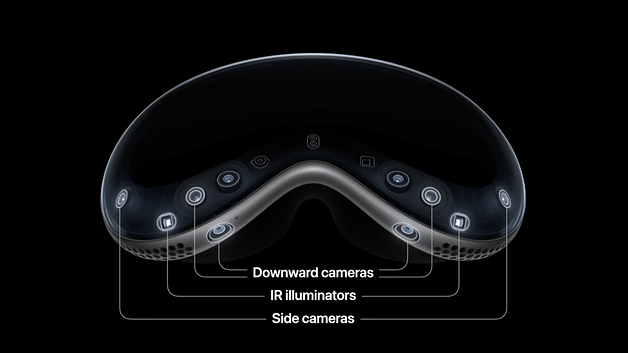

The sensor array system feeds high-resolution video to the displays, utilizing two primary cameras located at the bottom-front of the lenses. These 3D cameras enable a more comprehensive depth of field. With the assistance of downward and side cameras, along with IR illuminators, precise head and hand tracking is achievable. Employing a LiDAR scanner and a TrueDepth camera, the Vision Pro performs real-time 3D mapping and more.

Furthermore, within the Vision Pro, a high-performance eye tracking system uses high-speed IR cameras and LED illuminators that project invisible light patterns onto the eyes, ensuring responsive and intuitive input. Combined with the hand tracking described above, this eliminates the need for hardware controllers.

EyeSight

A notable feature of Apple’s Vision Pro is EyeSight, designed to enhance your interaction with others while using the headset. Here’s how it works: suppose you’re immersed in the Vision Pro experience and someone approaches you. The device automatically turns “transparent”, allowing you to see the person, while the goggles display an image of your eyes.

When you’re completely engaged in an application or your virtual surroundings, EyeSight also provides a visual cue to others about your point of focus. Just remember that the eyes on the display aren’t actually your real eyes, but a projection of them in the lenticular lens of the OLED panel. Some people believe this makes the device a bit dystopian.

Machine Learning

As mentioned, Apple employs Machine Learning (ML) to create “Personas,” which are digital representations of users that mirror their facial and hand movements in real-time. As far as I understand, this can be achieved using the front sensors on the headset itself.

Much like how Face ID scans and creates a 3D image of your face, the same technique is utilized in combination with machine learning to generate a Persona. According to Apple, this process employs an advanced encoder-decoder neural network and has been trained on a diverse set of thousands of individuals to provide a more natural representation of you.

When it comes to tracking the movements of your face and hands during, say, a call, the array of cameras and sensors both inside and outside the headset work to replicate your movements in real-time. This includes finer details like eye and lip movements, as well as muscle contractions. In other words, these Personas possess volume and depth thanks to these techniques.

Despite this being a complex amalgamation of advanced techniques, it is important to remember that this is still a representation, not a perfect replication of the user’s movements.

Privacy and Security

As mentioned at the beginning of this Vision Pro guide, Apple places a strong emphasis on privacy and security. To secure your data, the Vision Pro introduces a new authentication system called Optic ID, which uses the user’s iris structure to unlock the headset.

Like Face ID data, Optic ID data is encrypted on the device and accessible only to the Secure Enclave processor. With Optic ID, you can use features like Apple Pay and Password AutoFill. It’s also a requirement for making purchases on the App Store.

Another key privacy feature that considers your individuality is related to eye input. Since it’s one of the tracking mechanisms of the Vision Pro, eye input is isolated to a separate background process, so apps and websites can’t track your gaze. Results are communicated only when you tap your fingers, much like a mouse click or tap.
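The gating described here can be pictured with a small model. The following Python sketch is purely illustrative: `GazeGate`, `update_gaze`, and `pinch` are hypothetical names, not part of visionOS or any Apple API. It only shows the idea that continuous gaze data stays on the system side and an app learns about a target solely at the moment of a finger tap.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class GazeGate:
    """Hypothetical system-side component (not an Apple API)."""

    on_select: Callable[[str], None]  # the only channel to the app
    _current_target: Optional[str] = field(default=None, repr=False)

    def update_gaze(self, target: Optional[str]) -> None:
        """Called continuously by the eye tracker; never forwarded to apps."""
        self._current_target = target

    def pinch(self) -> None:
        """Called when the user taps their fingers: reveal one target."""
        if self._current_target is not None:
            self.on_select(self._current_target)


# The "app" only ever sees what arrives through on_select.
received: List[str] = []
gate = GazeGate(on_select=received.append)

for looked_at in ["Photos", "Safari", "Music"]:
    gate.update_gaze(looked_at)

print(received)  # [] because nothing was confirmed with a tap
gate.pinch()
print(received)  # ['Music']
```

In this model the app never learns that the user glanced at Photos or Safari, only that Music was selected, which mirrors the mouse-click analogy in the text.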

Camera data is processed at the system level, which means individual apps cannot access your surroundings.

Current software version: visionOS

The Apple Vision Pro runs visionOS out of the box. Although this is a new operating system, it is expected to follow Apple’s standard software update protocols, with annual new versions being released to introduce new features and security patches. We’ll have additional information on this when we get to test the device in 2024.

Apple Vision Pro roundup reviews

Right after the WWDC event, in 2023, some journalists and tech influencers had the chance to do a demo with the Apple Vision Pro. Here are some of the most interesting characteristics mentioned in these first impressions from the heads of channels like CNET and The Verge, as well as YouTube channel influencers such as MKBHD, Sara Dietschy, and Brian Tong.

- The Vision Pro isn’t light: Apple hasn’t yet revealed the official dimensions and weight of the headset. The product may also undergo some changes from what we saw presented during the WWDC 2023 keynote. However, the consensus is that this is not a lightweight device and that its balance is off due to the external battery pack (half a kilogram is the weight suggested by Nilay Patel, the editor-in-chief of The Verge). An additional headband over the head will be included for enhanced comfort, but unfortunately, it doesn’t mitigate the heavy sensation created by the headset on the front of the face in its current form.

- It boasts one of the best image qualities ever experienced in an MR device: The image quality experience was also unanimously praised by everyone who demoed the device. Some of them—like Brian Tong—were particularly impressed by the resolution of the display.

- The Personas feature is an interesting touch: Although the Personas apparently didn’t quite match expectations, they managed to avoid triggering the Uncanny Valley sensation. For some, even though it initially felt unnatural, as the FaceTime call progressed, they found themselves paying less attention to it, and it began to feel more natural. Personally, I’m really pleased that the Personas lean more toward a realistic projection than toward an avatar like the one in Meta’s solution.

- The visionOS is remarkable: Software-wise, they all agree that Apple apparently did an outstanding job. While these experiences were part of a demo, we are all expecting the actual user experience to closely match what Apple has presented, right?

- However, a mere two hours of battery life isn’t impressive: Once again, the runtime was an aspect where everyone had higher expectations. Although the external battery pack allows for the interchange of batteries to extend usage time, the device’s runtime still falls short. This also adds to the cost of an already expensive device.

Apple Vision Pro price and availability

The Apple Vision Pro is now available for pre-order, with sales kicking off in the United States on February 2, 2024, and gradually rolling out to international markets throughout the year. Priced from $3,499, it introduces an innovative sizing feature: just use Face ID on your iPhone or iPad to determine your perfect fit.

In addition, a range of optical inserts is on offer, with choices including Zeiss lenses, starting at $99 for reading glasses and $149 for prescription lenses. The pricing also varies based on the storage capacity:

- 256GB model priced at $3,499

- 512GB variant available for $3,699

- 1TB option at $3,899

Apple offers a compelling financing deal with the Apple Card Monthly Installments, featuring 0% APR. Additionally, you’ll get 3% daily cash back on the entire purchase of your Apple Vision Pro.

For extra security, you might want to look into AppleCare+ at an additional cost of $499.00, or choose a monthly plan for $24.99. This plan covers unlimited accidental damage repairs, provides Apple-certified service and support, and ensures express replacement to reduce any repair-related downtime.
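To see how these figures add up, here is a small, hedged calculator. It uses only the prices quoted above; the insert prices are “starting at” figures, and applying the 3% Daily Cash rate to the amount passed in is an assumption about how the offer is calculated. All names are illustrative.

```python
# Prices in US dollars, as quoted in this guide.
BASE_PRICES = {"256GB": 3499, "512GB": 3699, "1TB": 3899}
INSERT_PRICES = {None: 0, "readers": 99, "prescription": 149}  # starting prices
APPLECARE_ONE_TIME = 499
DAILY_CASH_PERCENT = 3


def total_cost(storage, inserts=None, applecare=False):
    """Sum the headset, optional Zeiss inserts, and optional AppleCare+."""
    total = BASE_PRICES[storage] + INSERT_PRICES[inserts]
    if applecare:
        total += APPLECARE_ONE_TIME
    return total


def daily_cash(amount):
    """Cash back on a purchase amount (assumes a flat 3% of that amount)."""
    return amount * DAILY_CASH_PERCENT / 100


config_total = total_cost("512GB", inserts="prescription", applecare=True)
print(f"Total: ${config_total:,}")
print(f"Daily Cash on the headset alone: ${daily_cash(BASE_PRICES['512GB']):.2f}")
```

For example, a 512GB model with prescription inserts and the one-time AppleCare+ plan comes to $4,347 before tax.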

Is there anything we’ve overlooked? Your suggestions and feedback are highly valued. We’re looking forward to hearing your thoughts and comments!

This article received an update on January 19 to include details about the pre-order program and the official start of sales.