Apple was recently granted a patent for ‘EyeSight’, the external display on Vision Pro which shows the wearer’s eyes. The patent was filed way back in 2017 and envisioned the feature being used to show stylized eyes like those of anime and furry characters.

EyeSight is perhaps the most distinctive bit of hardware on Vision Pro. Apple has been sitting on the idea for the better part of six years, having first filed a provisional application in June of 2017. After filing the full application a year later, Apple was just granted the patent this month, formally titled ‘Wearable Device for Facilitating Enhanced Interaction’.

When a company files a patent, it generally aims to make the patent as broad as possible to maximize protection of its intellectual property. To that end, the patent envisions a wide range of use-cases that go beyond what we see on Vision Pro today.

At launch, Vision Pro’s EyeSight display shows a virtual representation of the wearer’s eyes, but Apple also envisioned stylistic representations of the wearer, including anime eyes, the eyes of a furry avatar, and even augmenting the wearer’s eyes with various graphics.

Apple imagined an even wider range of uses than just showing the person inside the headset, like showing the weather or a representation of the content the user is seeing inside.

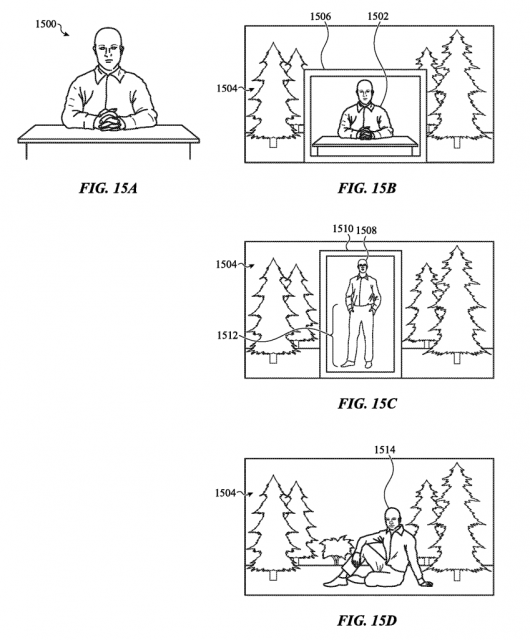

The patent also covers the ‘breakthrough’ feature of Vision Pro, in which the headset detects people nearby and fades them into view so the wearer can see them. While the feature currently shows the outside person in a sort of faded-in view, Apple also envisions showing them in a window with hard edges, or even placing them seamlessly into the virtual environment.

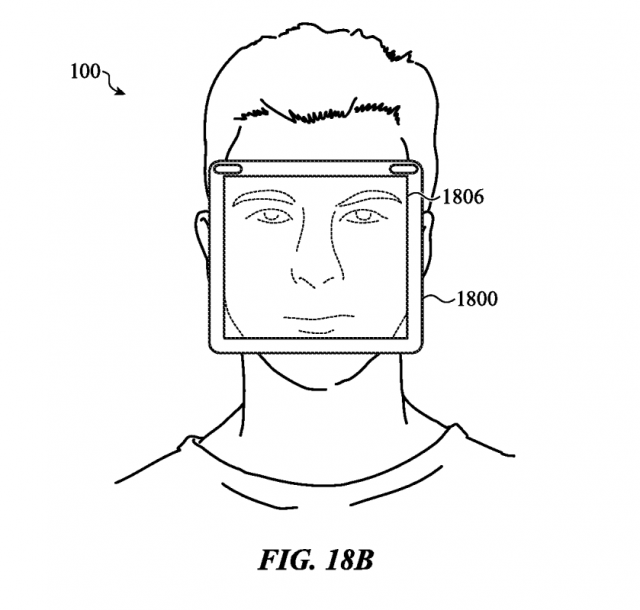

The patent also imagines a hilarious-looking ‘FaceSight’ version of EyeSight, which would use a display large enough to show the wearer’s entire face.

Patents like this can be interesting because sometimes these unrealized concepts will make their way into future versions of the product. One interesting bit that stood out to me in the patent describes the system detecting “whether the observer matches a known contact of the wearer,” and using that info to decide what to show on the display. As of now, the headset does detect when people are near you, but doesn’t analyze who they are or whether you know them.

Thanks to Collin B. on Twitter for the tip