The Quest v64 update brought two undocumented major new features.

You’d generally think that a changelog for the system software of a consumer electronics device would include all major new functionality introduced, but with Meta that isn’t always the case.

Version 64, released earlier this month, officially brought improved passthrough quality, external mic support, and lying down mode to Quest 3, and made casting no longer end when the headset is taken off.

But Quest power users have noticed v64 also brings two major features not mentioned in the changelog: furniture recognition on Quest 3 and simultaneous hand tracking and Touch Pro or Touch Plus controllers in the home space.

Furniture Recognition On Quest 3

Quest 3 generates a 3D mesh of your room during mixed reality setup, and could always infer the positions of your walls, floor, and ceiling from this 3D mesh. But until v64 the headset didn’t know which shapes within this mesh represent more specific elements like doors, windows, furniture, and TVs. You could manually mark these out, but that manual requirement meant developers couldn’t rely on users having done so.

With v64, though, at the end of mixed reality room scanning, Quest 3 now creates a labeled rectangular cuboid bounding box around each of the following:

- Doors

- Windows

- Beds

- Tables

- Sofas

- Storage (cabinets, shelves, etc.)

- Screens (TVs and monitors)

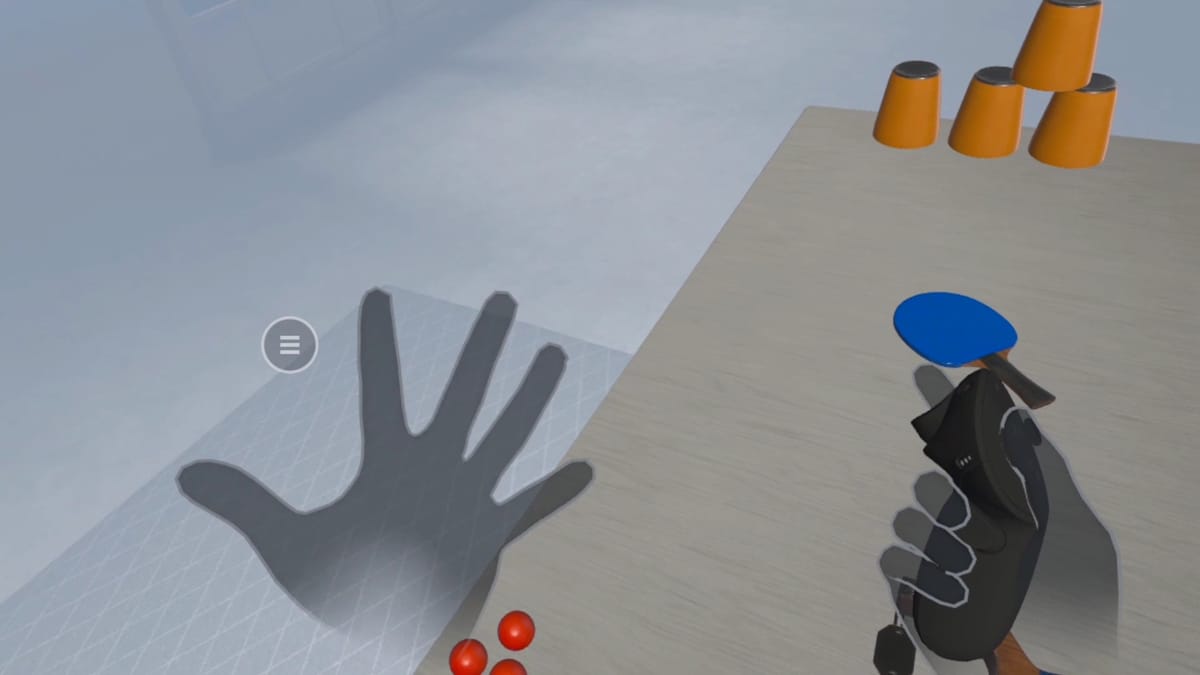

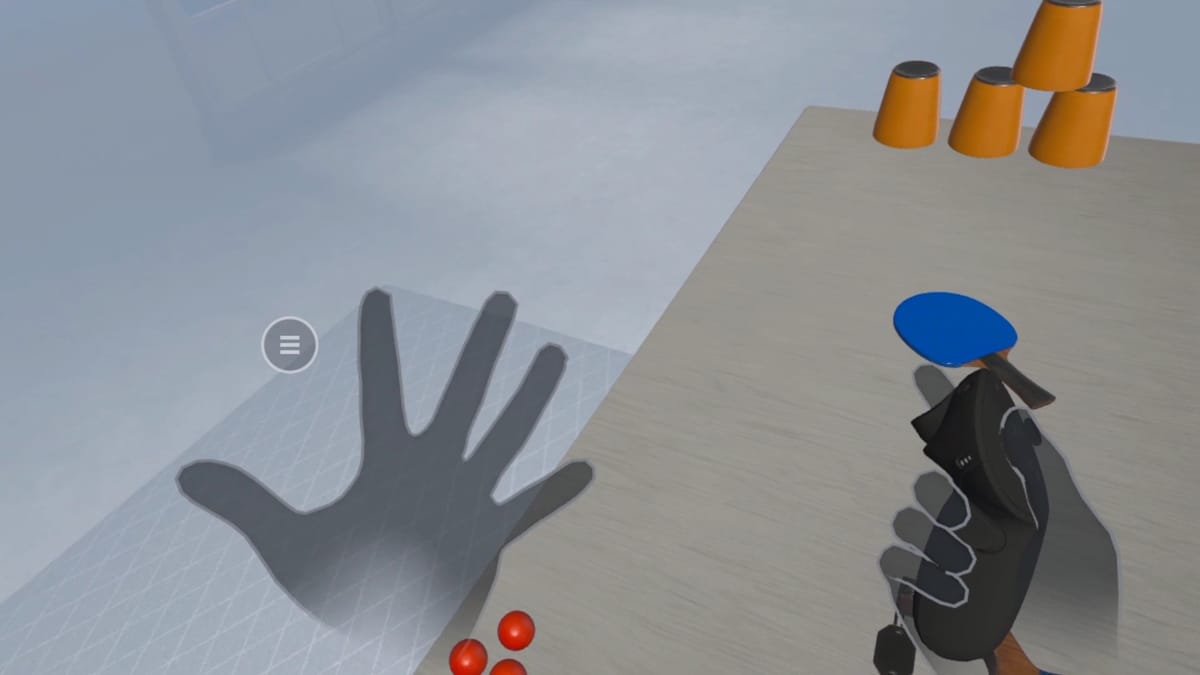

Quest 3 v64 furniture recognition footage from Squashi9.

Quest developers can access these bounding boxes using Meta’s Scene API and use them to automatically place virtual content. For example, they could place a tabletop gameboard on the largest table in the room, replace your windows with portals, or depict your TV in a fully VR game so you don’t punch it.
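To make the tabletop example concrete, here is a minimal Python sketch of the selection logic. It does not use Meta's Scene API; the `LabeledBox` type, the label strings, and the y-up coordinate convention are all assumptions made for illustration. It simply picks the table with the largest top surface and returns the point where a gameboard could sit.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LabeledBox:
    """Hypothetical stand-in for a labeled bounding box (not Meta's actual type)."""
    label: str                          # e.g. "TABLE", "SOFA" (assumed label strings)
    center: tuple[float, float, float]  # x, y, z in metres, y pointing up (assumed)
    size: tuple[float, float, float]    # width (x), height (y), depth (z) in metres

    @property
    def top_area(self) -> float:
        # Area of the horizontal top face of the cuboid.
        return self.size[0] * self.size[2]

    @property
    def top_center(self) -> tuple[float, float, float]:
        # Centre of the top face: move up by half the box height.
        x, y, z = self.center
        return (x, y + self.size[1] / 2, z)


def largest_table(boxes: list[LabeledBox]) -> Optional[LabeledBox]:
    """Return the table with the largest top surface, or None if there is no table."""
    tables = [b for b in boxes if b.label == "TABLE"]
    return max(tables, key=lambda b: b.top_area, default=None)


# Made-up room contents, for illustration only.
room = [
    LabeledBox("SOFA", (0.0, 0.4, 2.0), (2.0, 0.8, 0.9)),
    LabeledBox("TABLE", (1.0, 0.25, 0.0), (0.6, 0.5, 0.6)),     # small coffee table
    LabeledBox("TABLE", (-1.5, 0.375, 1.0), (1.6, 0.75, 0.9)),  # larger dining table
]

target = largest_table(room)
if target is not None:
    print("Place gameboard at", target.top_center)
```

A real app would read the boxes from the headset's scene data rather than a hard-coded list, and would also need a fallback for rooms where no table was detected, which is why `largest_table` returns `None` instead of raising.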

Apple Vision Pro already gives developers a series of crude 2D rectangles representing the surface of seats and tables, but doesn’t yet provide a 3D bounding box. The RoomPlan API on iPhone Pro can do 3D bounds, but that API isn’t available in visionOS.

The new Quest 3 functionality was first publicly noticed by X user Squashi9, as far as we can tell. UploadVR tested v64 on Quest 3 and found this feature works very well for most object categories, except for Storage, for which it often creates inaccurate bounds.

Quest 3 v64 furniture recognition footage from VR enthusiast Luna.

You may be thinking this sounds a lot like the SceneScript research Meta showed off last month. But the furniture recognition on Quest 3 in v64 is far more crude than SceneScript. For a sofa, for example, v64 creates a single simple rectangular cuboid encapsulating it, while SceneScript generates separate rectangular cuboids for the seat area and arms, as well as a cylinder for the backrest. SceneScript likely requires far more computing power than Quest 3’s current furniture recognition, and Meta presented it solely as research, not a near-term feature.

Simultaneous Hands & Controllers In Home

Since last year, Quest developers have been able to experiment with using hand tracking and Quest 3 or Quest Pro controllers simultaneously, and for the past two months they’ve been able to publish apps using this feature to the Quest Store and App Lab. Meta calls this feature Multimodal.

With v64, Multimodal has been added to the Quest home space, both in passthrough and in VR mode. Again, though, keep in mind it only works with Quest 3 or Quest Pro controllers, so you won’t see this feature on Quest 2 unless you buy the Pro controllers for $300.

Multimodal enables an instantaneous transition between controller tracking and hand tracking, with no more delay. It also lets you use one controller while the headset keeps tracking your other hand. That means you can pick up a single controller to navigate the Quest home space, without jank, as you might a TV remote. This could be appealing for web browsing, media watching, and changing settings, offering the precision and tactility of a tracked controller while keeping your other hand free.