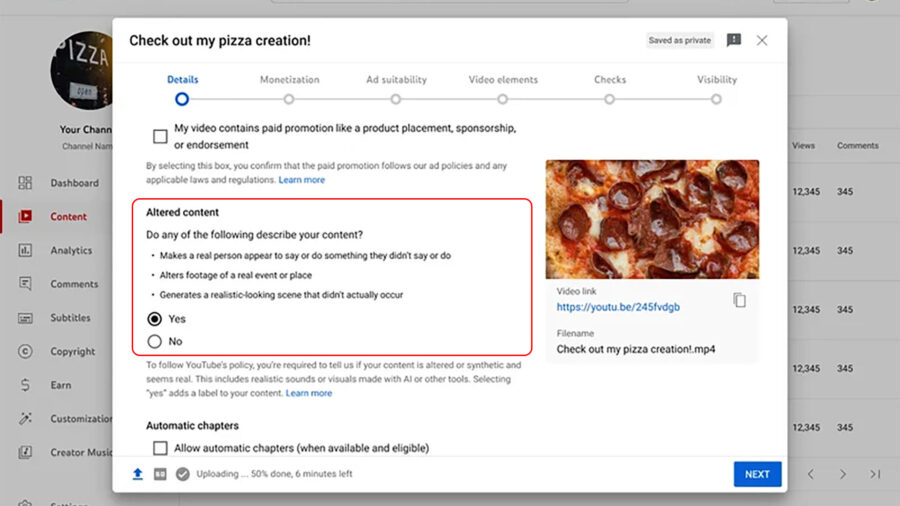

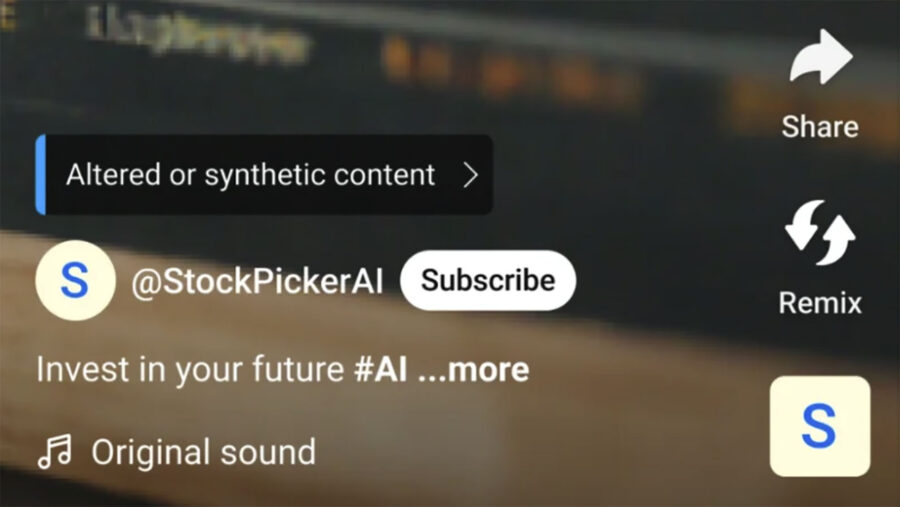

AI-generated content has been on the rise in recent years. As it becomes more popular and more accessible, concerns about its misuse are growing. Following its announcement last November regarding responsible AI, YouTube has added a new tool to YouTube Studio. The feature lets creators disclose the less apparent uses of AI, such as voice alteration, face swaps, or any AI-generated, realistic-looking scenes. As of now, it’s purely voluntary and relies on the creator’s good faith.

As AI-generated content becomes more widespread than ever, authenticity concerns grow. YouTube now offers a new tool to ease some suspicions about content. The new labeling tool is voluntary, giving the creator community a chance to build much-needed trust with its audience.

Authenticity is the key

With this new tool, YouTube aims to combat disinformation spread through the manipulative use of AI tools. You’ll still be able to post an image of yourself riding a dragon or create fantastic landscapes. As long as the end result is clearly unrealistic, there won’t be any problem in posting it; no AI labeling is needed. YouTube specifies the following use cases in which AI labeling is called for:

- Using the likeness of a realistic person: Digitally altering content to replace the face of one individual with another’s or synthetically generating a person’s voice to narrate a video.

- Altering footage of real events or places: Such as making it appear as if a real building caught fire, or altering a real cityscape to make it appear different than it does in reality.

- Generating realistic scenes: Showing a realistic depiction of fictional major events, like a tornado moving toward a real town.

Full coverage isn’t quite there yet

While we should commend YouTube’s move, there are still some major caveats. YouTube’s specification of the AI alterations that won’t require tagging leaves some room for interpretation: “We also won’t require creators to disclose when synthetic media is unrealistic and/or the changes are inconsequential.” Although YouTube goes on to list some use cases, the term “unrealistic” still seems rather subjective. More than that, it’s the voluntary nature of this tool that may be its undoing.

The voluntary dilemma

Most creators will surely be decent and honest regarding the authenticity of their content. The importance of audience-creator trust can’t be overstated. It’s the small percentage of malevolent users that I’m worried about. The system offers no solution for them, and, to be honest, I’m not sure there is another way to combat them at this point. The current sophistication of AI-generated content makes any algorithm-based detection pretty difficult to achieve, and the consequences of automatic tagging may also pose a problem. A solution may lie in some sort of digital provenance marking, such as the C2PA standard, but it requires much more than a technological solution, as all social dilemmas do.

Do you believe such steps may help to combat disinformation and fake news, or are they no more than lip service? Let us know in the comments.